By Matías Guerrero

Today, artificial intelligence is reshaping how we build and operate systems, especially within the field of engineering. From accelerating development cycles to automating tasks, AI is becoming a practical tool in everyday workflows. As AI cloud integration evolves and intelligent agents connect more deeply with platforms like AWS and Azure, cloud environments are starting to behave less like static infrastructure and more like adaptive systems that can work alongside AI in real time.

What is Model Context Protocol (MCP)?

Imagine a translator at a crowded conference. Instead of every attendee trying to understand every other language, the translator provides one common language that everyone can follow. MCP plays a similar role. It is an open protocol, introduced by Anthropic in late 2024, that standardizes how AI applications connect to external tools and data sources. This lets cloud systems and AI agents communicate regardless of their native interfaces.

Major cloud providers like Amazon and Microsoft are already embracing this model. They’re beginning to open up their services through MCP-compatible servers, paving the way for AI-native infrastructure. Although AWS and Azure share the same vision of a more intelligent, agent-friendly cloud, they approach it in very different ways.

In this blog, we’ll explore how AWS and Azure are implementing MCP, compare the strengths of their current offerings, and break down what it means for engineering teams looking to supercharge their cloud workflows with AI. Whether you’re building with agents today or planning for a smarter future, understanding the MCP landscape is becoming essential.

High-level overview

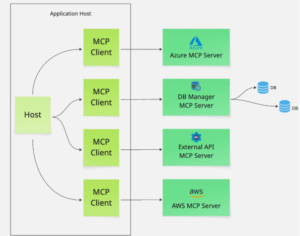

Figure 1: MCP architecture

MCP’s architecture consists of three primary components: the host, the client and the server. This modular design supports scalability, flexibility and ease of integration across different platforms and services.

MCP host

The host is the application that uses the AI agent, such as a chat application, an integrated development environment (IDE) or any other software that needs AI functionality. The host initiates interactions and contains the client component.

MCP client

Integrated within the host, the client serves as the intermediary between the host and a server. Each client maintains a one-to-one connection with a single server. The client formulates requests based on user input or application needs and sends them to the server. It also processes the responses it receives, so that the host application can take the appropriate action.

MCP server

The server executes the operations requested by the client. To do so, it interfaces with external resources such as databases, APIs or local files. At the end of the cycle, it returns the results to the client, completing the communication loop.
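The following minimal, in-process Python sketch makes the three roles concrete. It does not use the MCP SDK. The ToyServer, ToyClient and ToyHost classes, the get_customer tool and the CUSTOMERS dictionary (a stand-in for a database) are all hypothetical. The messages follow the JSON-RPC 2.0 shape that MCP uses, with a tools/call request and a text content result. A real client would also perform the initialization handshake and reach the server over a transport such as stdio, rather than through a direct method call.

```python
import json

# Hypothetical external resource the server can reach (stands in for a database).
CUSTOMERS = {"c-001": {"name": "Acme Corp", "tier": "gold"}}


class ToyServer:
    """MCP server role: executes the operations the client requests."""

    def handle(self, raw_request: str) -> str:
        request = json.loads(raw_request)
        reply = {"jsonrpc": "2.0", "id": request["id"]}
        if request["method"] != "tools/call":
            reply["error"] = {"code": -32601, "message": "Method not found"}
        elif request["params"]["name"] != "get_customer":
            reply["error"] = {"code": -32602, "message": "Unknown tool"}
        else:
            customer_id = request["params"]["arguments"]["customer_id"]
            record = CUSTOMERS.get(customer_id, {})
            # Tool results are returned as a list of content items.
            reply["result"] = {"content": [{"type": "text", "text": json.dumps(record)}]}
        return json.dumps(reply)


class ToyClient:
    """MCP client role: formulates requests and processes responses."""

    def __init__(self, server: ToyServer) -> None:
        self.server = server
        self.next_id = 1

    def call_tool(self, name: str, arguments: dict) -> dict:
        request = {"jsonrpc": "2.0", "id": self.next_id, "method": "tools/call",
                   "params": {"name": name, "arguments": arguments}}
        self.next_id += 1
        response = json.loads(self.server.handle(json.dumps(request)))
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        return json.loads(response["result"]["content"][0]["text"])


class ToyHost:
    """MCP host role: the user-facing application that embeds the client."""

    def __init__(self) -> None:
        self.client = ToyClient(ToyServer())

    def describe_customer(self, customer_id: str) -> str:
        record = self.client.call_tool("get_customer", {"customer_id": customer_id})
        return f"{record['name']} is a {record['tier']} customer"


print(ToyHost().describe_customer("c-001"))  # Acme Corp is a gold customer
```

Only the client knows how to build and parse protocol messages, and only the server touches the data source. The host never sees JSON-RPC at all.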

If you are interested in a deeper dive into how this architecture works in practice—including a step-by-step guide to building your own MCP server—check out our detailed blog post on MCP architecture and server implementation.

MCP servers for cloud providers integration

With the MCP architecture, integrating cloud services from providers like AWS or Azure becomes much simpler. You connect the agent to the provider’s MCP server, and the agent can then use every feature that server exposes without separate tools for each service in each agent. One interface, many possibilities.
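The "one interface" idea is easiest to see from the client side. Every MCP server describes its tools in the same tools/list format: a name, a description and a JSON Schema under inputSchema. As a result, one adapter can expose any server's tools to an agent. This sketch assumes a generic function-calling target with name, description and parameters keys. That target shape and the sample tool entry are illustrative and do not come from a specific AWS or Azure server.

```python
from typing import List


def to_function_specs(tools_list_result: dict) -> List[dict]:
    """Convert an MCP tools/list result into generic function-calling specs."""
    specs = []
    for tool in tools_list_result.get("tools", []):
        specs.append({
            "name": tool["name"],
            "description": tool.get("description", ""),
            # MCP tools declare their arguments as a JSON Schema object.
            "parameters": tool.get("inputSchema", {"type": "object", "properties": {}}),
        })
    return specs


# Illustrative tools/list result; a real server returns its own schemas.
sample = {"tools": [{
    "name": "search_documentation",
    "description": "Search AWS documentation",
    "inputSchema": {
        "type": "object",
        "properties": {"search_phrase": {"type": "string"}, "limit": {"type": "integer"}},
        "required": ["search_phrase"],
    },
}]}

for spec in to_function_specs(sample):
    print(spec["name"], sorted(spec["parameters"]["properties"]))
```

The adapter does not care which provider produced the list, so adding another MCP server requires no new agent-side tool code.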

Operational workflow

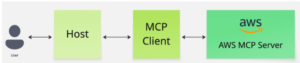

Figure 2: A user interacts with the AWS MCP server

The interaction within the MCP architecture follows a systematic workflow:

- User interaction: A user initiates a request through the host application (e.g., querying customer data).

- Request formulation: The client processes this input and formulates a structured request.

- Server communication: The client sends the request to the server, which identifies the appropriate tools or data sources required to fulfill the request.

- Execution and response: The server executes the necessary operations, retrieves the required data and sends the results back to the client.

- Result delivery: The client processes the response and presents the information to the user through the host application.
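At the protocol level, MCP messages are JSON-RPC 2.0 objects. The Python sketch below gives a rough mapping of the workflow above onto the messages a client sends. First comes a handshake (initialize, then the notifications/initialized notification). Next, the client discovers tools with tools/list, and finally it calls tools/call for the user's request. The get_customer tool, the client name and the protocol version string are assumptions. A real client waits for each response before sending the next message.

```python
import json
from itertools import count
from typing import List

PROTOCOL_VERSION = "2025-03-26"  # assumption: use a revision your server supports


def session_messages(tool_name: str, arguments: dict) -> List[dict]:
    """Messages an MCP client sends, in order, to answer one user request."""
    ids = count(1)  # every request (but not a notification) carries a unique id
    return [
        # Handshake: the client announces its protocol version, capabilities and identity.
        {"jsonrpc": "2.0", "id": next(ids), "method": "initialize",
         "params": {"protocolVersion": PROTOCOL_VERSION, "capabilities": {},
                    "clientInfo": {"name": "example-host", "version": "0.1.0"}}},
        # Notification (no id): the client confirms the handshake is complete.
        {"jsonrpc": "2.0", "method": "notifications/initialized"},
        # Discovery: the client asks which tools the server exposes.
        {"jsonrpc": "2.0", "id": next(ids), "method": "tools/list"},
        # Execution: the client invokes the tool chosen for the user's request.
        {"jsonrpc": "2.0", "id": next(ids), "method": "tools/call",
         "params": {"name": tool_name, "arguments": arguments}},
    ]


for message in session_messages("get_customer", {"customer_id": "c-001"}):
    print(json.dumps(message))
```

The server answers each request with a response that carries the same id. The result of the final tools/call is what the host presents to the user.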

AI agents and cloud services unite through MCP

AWS MCP servers: enhancing AI capabilities with AWS services

As part of its push to make the cloud more accessible to AI-native workflows, AWS has introduced a family of Model Context Protocol (MCP) servers.

These AWS MCP servers are designed to give AI agents safe, well-scoped access to a variety of AWS capabilities using standardized protocols. Each server focuses on a specific domain, such as:

- AWS documentation – allows agents to search, retrieve and convert AWS docs to structured responses

- Cost analysis – enables agents to query billing and usage data

- Terraform and CDK – helps generate or validate infrastructure as code

- Amazon Aurora MySQL – allows SQL generation and schema introspection for Aurora MySQL

- AWS Lambda – allows for deployment and function management through guided agent prompts

- Amazon Bedrock knowledge bases – enables discovery and natural language querying of Bedrock KBs

- Amazon Nova Canvas – generates text-based or color-guided images with workspace integration

- AWS diagram – generates infrastructure, sequence or flow diagrams from Python code

- AWS CloudFormation – enables CRUDL operations on AWS resources via the AWS Cloud Control API

- Amazon SNS / SQS – lets agents create topics/queues, send/receive messages and manage attributes

- Frontend – provides documentation and guidance for modern frontend development on AWS

- AWS Location Service – enables geolocation, place search, reverse geocoding and route optimization

- Git repo research – performs semantic search and structure analysis on Git repositories

- Amazon Aurora PostgreSQL – converts questions into SQL and runs queries on Aurora Postgres

- Code documentation generation – auto-generates documentation and diagrams from code repositories

- Amazon Neptune – runs openCypher and Gremlin queries on Neptune databases

The official awslabs/mcp repository and AWS blog series describe MCP as a foundational layer in a broader vision: using agent-based tooling to accelerate cloud adoption, reduce DevOps friction and simplify complex workflows for developers, platform teams and even non-technical users.

Early days: limited docs and community-built solutions

While AWS has published these MCP servers, official documentation is still limited, especially when it comes to using them outside of IDEs. Tools like Microsoft VS Code already support MCP and provide general setup guides (code.visualstudio.com), and clients like Cline and Claude Desktop can be configured to connect to MCP servers. However, there are currently no detailed tutorials on how to use these specific MCP servers (like the AWS Documentation or Lambda server) in standalone agents or custom local workflows.

AWS has announced early-stage support for MCP through blog posts (aws.amazon.com) and provides the core implementations on GitHub (awslabs/mcp). It also offers an SDK as part of Amazon Bedrock Agents that simplifies working with MCP. However, AWS notes that developers who don’t use this SDK must write and maintain custom logic for response handling, control flow and agent state management.

As a result, many developers are building custom scripts and connectors to automate MCP server interactions and plug them into multi-agent systems or local setups. In short, while IDE integrations are already emerging, the ecosystem for standalone or embedded MCP use is still maturing and the community currently relies on GitHub examples and self-built tooling to bridge the gap.
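To illustrate the kind of self-built connector described above, here is a minimal Python sketch. It launches an MCP server as a subprocess and exchanges JSON-RPC messages over stdio, which MCP defines as one JSON message per line. The StdioConnector class is hypothetical. It leaves the initialize handshake to the caller, and it does not handle timeouts or server-initiated requests, which a production client (or an official MCP SDK) would.

```python
import json
import subprocess
import sys
from typing import List, Optional


class StdioConnector:
    """Hypothetical minimal MCP client transport over a child process's stdin/stdout."""

    def __init__(self, command: List[str]) -> None:
        # The server reads JSON-RPC messages on stdin and writes replies on stdout.
        self.proc = subprocess.Popen(
            command, stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True
        )
        self.next_id = 1

    def _send(self, message: dict) -> None:
        # json.dumps escapes newlines inside strings, so each message is exactly one line.
        self.proc.stdin.write(json.dumps(message) + "\n")
        self.proc.stdin.flush()

    def notify(self, method: str, params: Optional[dict] = None) -> None:
        """Send a notification: no id, and no response is expected."""
        message = {"jsonrpc": "2.0", "method": method}
        if params is not None:
            message["params"] = params
        self._send(message)

    def request(self, method: str, params: Optional[dict] = None) -> dict:
        """Send a request and block until the response with the matching id arrives."""
        message = {"jsonrpc": "2.0", "id": self.next_id, "method": method}
        if params is not None:
            message["params"] = params
        self.next_id += 1
        self._send(message)
        while True:
            line = self.proc.stdout.readline()
            if not line:
                raise RuntimeError("MCP server closed its output stream")
            reply = json.loads(line)
            # Skip messages that are not the reply to this request (e.g. server notifications).
            if "method" not in reply and reply.get("id") == message["id"]:
                return reply

    def close(self) -> None:
        self.proc.stdin.close()  # EOF on stdin tells a stdio server to exit
        self.proc.wait(timeout=5)
        self.proc.stdout.close()


# Tiny stand-in "server" for local testing: it echoes each request's method name.
ECHO_SERVER = (
    "import json, sys\n"
    "for line in sys.stdin:\n"
    "    msg = json.loads(line)\n"
    "    if 'id' in msg:\n"
    "        reply = {'jsonrpc': '2.0', 'id': msg['id'], 'result': {'echo': msg['method']}}\n"
    "        print(json.dumps(reply), flush=True)\n"
)

if __name__ == "__main__":
    connector = StdioConnector([sys.executable, "-c", ECHO_SERVER])
    print(connector.request("tools/list"))
    connector.close()
```

Pointing the connector at a real server only changes the command. For example, the Docker image built later in this post could be started with `StdioConnector(["docker", "run", "--rm", "-i", "awslabs/aws-documentation-mcp-server:latest"])`. The caller would then send an initialize request, the notifications/initialized notification and, after that, tools/list or tools/call.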

Azure MCP server: integrating AI agents with Azure services

Following the same industry trend, Microsoft has introduced the Azure MCP server, an extensible gateway that connects AI agents to Azure services through natural language. Unlike AWS, which offers multiple specialized MCP servers, Azure consolidates its service integrations into a single server.

It acts as a bridge between LLMs and Azure services and provides:

- JSON-based communication tailored for AI agents

- Natural language-to-API translation for Azure operations

- Auto-completion and intelligent parameter suggestions

- Consistent, explainable error handling

- A tool-based plugin model that makes the server easy to extend or customize
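One way to picture a tool-based plugin model is as a registry. Each plugin registers a function under a tool name, and a single server dispatches every tools/call request through that registry. When something goes wrong, the server returns structured errors that an agent can act on. This is a hypothetical Python sketch, not how the Azure MCP server is actually implemented. The storage-container-list tool and its placeholder data are invented. The error conventions come from MCP itself: an unknown tool is reported as a JSON-RPC error with code -32602, and a tool-level failure is returned as a result flagged with isError.

```python
import inspect
import json
from typing import Callable, Dict

# Registry of tool name -> handler; plugins add themselves with @tool(...).
TOOLS: Dict[str, Callable[..., object]] = {}


def tool(name: str):
    """Decorator that registers a function as a tool (hypothetical plugin hook)."""
    def register(func: Callable[..., object]) -> Callable[..., object]:
        TOOLS[name] = func
        return func
    return register


@tool("storage-container-list")  # hypothetical tool name
def list_containers(account: str) -> list:
    # Placeholder data; a real plugin would call the Azure SDK here.
    return [f"{account}-logs", f"{account}-backups"]


def handle_tools_call(request: dict) -> dict:
    """Dispatch one tools/call request to its plugin and report failures explicitly."""
    params = request.get("params", {})
    name, arguments = params.get("name"), params.get("arguments", {})
    reply = {"jsonrpc": "2.0", "id": request.get("id")}
    if name not in TOOLS:
        # Protocol-level error: the agent asked for a tool that does not exist.
        reply["error"] = {"code": -32602, "message": f"Unknown tool: {name}",
                          "data": {"available_tools": sorted(TOOLS)}}
        return reply
    handler = TOOLS[name]
    try:
        inspect.signature(handler).bind(**arguments)  # validate arguments before calling
    except TypeError as exc:
        # Tool-level error: returned as a result flagged isError so the agent can retry.
        reply["result"] = {"content": [{"type": "text", "text": f"Invalid arguments: {exc}"}],
                           "isError": True}
        return reply
    value = handler(**arguments)
    reply["result"] = {"content": [{"type": "text", "text": json.dumps(value)}],
                       "isError": False}
    return reply
```

Adding a service in this design means registering one more function. The dispatch and error handling stay identical for every tool.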

Built-in tools

Rather than multiple MCP endpoints, Azure MCP offers a set of tools inside one server, each corresponding to a supported Azure service. These tools currently include:

- Azure AI Search – List/search services and indexes, inspect schemas and query vectors

- Cosmos DB – Query containers, manage items and execute SQL across NoSQL databases

- Azure Data Explorer – Run KQL queries, inspect clusters, databases and tables

- PostgreSQL – Inspect schema, list databases/tables and run queries

- Azure Storage – Manage blob containers, blobs and table storage

- Best practices tooling – Surface secure and recommended usage patterns for Azure SDKs

- Azure Monitor (Log Analytics) – Run KQL across workspaces, inspect log schemas

- Azure Key Vault – Manage and retrieve keys

- Azure App Configuration – Handle key-values and configuration settings

- Azure Service Bus – View queue/topic/subscription metadata

- Azure Resource Groups – List and inspect groups

- Azure CLI + Developer CLI – Execute CLI commands directly via agent prompts

Unified AI integration with GitHub Copilot and custom clients

The Azure MCP server represents a significant advancement in integrating AI agents with Azure services. By consolidating multiple service integrations under a single server architecture, it simplifies the development process for AI-driven applications.

This unified approach enables AI agents to interact with various Azure services, including Cosmos DB, Azure Storage and Azure Monitor, using natural language commands.

However, it’s important to note that the Azure MCP server is still in its initial versions. Currently, integration options are limited, with primary support for GitHub Copilot Agent Mode in Visual Studio Code and custom MCP clients. This narrow integration scope may hinder broader adoption, especially for developers seeking flexibility in their toolchains.

Despite these limitations, the integration process with GitHub Copilot in Visual Studio Code is notably straightforward. Developers can set up the Azure MCP server by configuring a simple mcp.json file within their project workspace. Once configured, GitHub Copilot’s Agent Mode can use the Azure MCP server, enabling natural language interactions with Azure services directly within the development environment.

MCP in action: Practice with AWS and Azure servers

Using the AWS documentation MCP server with Docker and Cursor: A step-by-step guide

Despite the early-stage nature of MCP tooling, it’s already possible to experiment with specific servers in a local setup. One of the easiest to get started with is the AWS documentation MCP server, since it works well in isolated environments.

The AWS documentation MCP server equips AI agents with tools to interact intelligently with AWS documentation. It exposes three main capabilities:

- read_documentation(url) which retrieves a full AWS documentation page and converts it into clean, markdown-formatted text

- search_documentation(search_phrase, limit) which uses the official AWS documentation Search API to find relevant pages based on a keyword or phrase

- recommend(url) which returns a list of related documentation pages to help agents suggest complementary content.
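On the wire, each of these capabilities is invoked with a JSON-RPC tools/call request. The Python sketch below builds the three requests using the tool and argument names listed above. Treat the exact argument schema as an assumption and confirm it against the server's tools/list response, since the server may accept additional optional parameters.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids, unique per session


def tool_call(name: str, arguments: dict) -> dict:
    """Build a JSON-RPC tools/call request as an MCP client would send it."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": "tools/call",
            "params": {"name": name, "arguments": arguments}}


page = "https://docs.aws.amazon.com/AmazonS3/latest/userguide/bucketnamingrules.html"
calls = [
    tool_call("search_documentation", {"search_phrase": "S3 bucket naming rules", "limit": 5}),
    tool_call("read_documentation", {"url": page}),
    tool_call("recommend", {"url": page}),
]

for call in calls:
    print(json.dumps(call))
```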

These tools allow agents to extract precise information, answer technical questions and provide helpful references, all grounded in the official AWS documentation.

Before we dive in, make sure you have the following:

- Docker installed and running.

- Cursor installed.

- A working Python environment (optional if you’re just using Docker).

- AWS credentials configured on your machine (needed by other AWS MCP servers, but not strictly required for the documentation server).

Step 1: Clone and build the MCP server

Clone the repo and build the Docker image locally:

```bash
git clone https://github.com/awslabs/mcp.git
cd mcp/src/aws-documentation-mcp-server
docker build -t awslabs/aws-documentation-mcp-server .
```

Once the build is complete, you’ll have a Docker image ready to go.

Step 2: Set up MCP in Cursor

Cursor supports both global and project-level MCP configurations via a JSON file.

Add the following to .cursor/mcp.json in your project folder (or to ~/.cursor/mcp.json to make the server available globally):

```json
{
  "mcpServers": {
    "awslabs.aws-documentation-mcp-server": {
      "command": "docker",
      "args": [
        "run",
        "--rm",
        "--interactive",
        "--env",
        "FASTMCP_LOG_LEVEL=ERROR",
        "awslabs/aws-documentation-mcp-server:latest"
      ],
      "env": {},
      "disabled": false,
      "autoApprove": []
    }
  }
}
```

This tells Cursor to spin up the MCP server using Docker whenever you interact with it inside the IDE.
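Conceptually, an MCP client turns each enabled entry into a process launch. It runs `command` with `args` and speaks MCP over the process's stdin and stdout. In the docker run arguments, `--interactive` keeps STDIN open, which the stdio transport requires, and `--rm` removes the container when the session ends. The hypothetical Python helper below reproduces that mapping from the configuration above. It is a sketch of the idea, not Cursor's own code.

```python
import json
import shlex
from typing import Dict, List

# The Cursor configuration from above, parsed as JSON.
CONFIG = json.loads("""
{
  "mcpServers": {
    "awslabs.aws-documentation-mcp-server": {
      "command": "docker",
      "args": ["run", "--rm", "--interactive", "--env", "FASTMCP_LOG_LEVEL=ERROR",
               "awslabs/aws-documentation-mcp-server:latest"],
      "env": {},
      "disabled": false,
      "autoApprove": []
    }
  }
}
""")


def launch_commands(config: dict) -> Dict[str, List[str]]:
    """Map each enabled server name to the argv an MCP client would spawn for it."""
    return {
        name: [entry["command"], *entry.get("args", [])]
        for name, entry in config.get("mcpServers", {}).items()
        if not entry.get("disabled", False)
    }


for name, argv in launch_commands(CONFIG).items():
    print(f"{name}: {shlex.join(argv)}")
```

Running the printed command by hand is a quick way to check that the image starts before involving the IDE.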

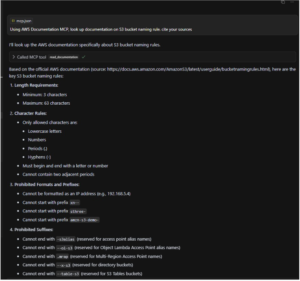

Step 3: Use the tool in Cursor

Once the configuration is in place, open Cursor and prompt the agent with something like:

“Using AWS documentation MCP, look up documentation on S3 bucket naming rules. Cite your sources.”

or

“Recommend content for page https://docs.aws.amazon.com/AmazonS3/latest/userguide/bucketnamingrules.html”

Cursor will detect that the aws-documentation-mcp-server is available and automatically run it via Docker. It will fetch the Markdown content of the relevant AWS docs and present a neat, citeable response.

Figure 3: Cursor user interacts with AWS documentation MCP

MCP has clear potential to improve how AI agents interact with AWS services, making it easier to query data, manage infrastructure and navigate complex cloud environments through natural language. While still in its early days, this approach lays the groundwork for more intuitive, automated and agent-driven workflows across the AWS ecosystem.

Step-by-step guide: Integrating Azure MCP server with GitHub Copilot in VS Code

Step 1: Prerequisites

Ensure you have the following:

- Visual Studio Code: Installed on your machine.

- GitHub Copilot: Installed and activated in VS Code.

- GitHub Copilot Chat: Installed and activated in VS Code.

- Node.js: Installed on your machine (it provides the npx command used in the configuration below).

- Azure Subscription: An active Azure account with appropriate permissions.

Step 2: Install the Azure MCP server manually

Create a .vscode folder in your project directory and add mcp.json with the following configuration:

```json
{
  "servers": {
    "Azure MCP Server": {
      "command": "npx",
      "args": ["-y", "@azure/mcp@latest", "server", "start"]
    }
  }
}
```

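This configuration tells VS Code to start the Azure MCP server with npx. npx fetches the latest @azure/mcp package (the -y flag skips npx’s install prompt) and runs it with the server start arguments.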
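If you set up many projects, you may prefer to script this step. The hypothetical Python helper below writes the same entry into .vscode/mcp.json, creating the folder if needed and keeping any other servers already defined in the file. It assumes the existing file is plain JSON. If yours contains comments, json.loads will reject it.

```python
import json
import tempfile
from pathlib import Path

AZURE_ENTRY = {"command": "npx", "args": ["-y", "@azure/mcp@latest", "server", "start"]}


def add_azure_mcp_server(project_dir: Path) -> Path:
    """Add (or update) the "Azure MCP Server" entry in <project_dir>/.vscode/mcp.json."""
    target = project_dir / ".vscode" / "mcp.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    # Keep existing settings and servers; only the Azure entry is replaced.
    config = json.loads(target.read_text(encoding="utf-8")) if target.exists() else {}
    config.setdefault("servers", {})["Azure MCP Server"] = AZURE_ENTRY
    target.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return target


if __name__ == "__main__":
    # Demo in a throwaway directory so no real project is modified.
    with tempfile.TemporaryDirectory() as tmp:
        print(add_azure_mcp_server(Path(tmp)).read_text(encoding="utf-8"))
```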

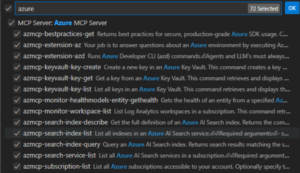

Step 3: Test the Azure MCP server

Open GitHub Copilot in VS Code and switch to Agent mode. You should see the Azure MCP server along with its list of tools.

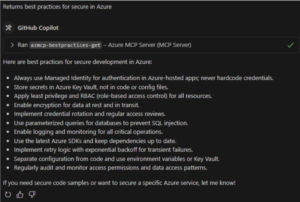

Start a chat in Copilot and send a prompt such as: “Return the best practices for security in Azure.”

Copilot will run one of the server’s tools (azmcp-bestpractices-get) and display the result in the chat.

One protocol, two approaches: Comparing AWS and Azure’s MCP strategies

While both Amazon Web Services (AWS) and Microsoft Azure are actively investing in the future of AI agents through MCP, their strategies take very different shapes. The shared vision is clear: enable AI agents to interact with cloud services using natural language, without manual integrations or custom-built connectors. But the execution, tooling and developer experience vary significantly between the two cloud giants.

High-level comparison

| Feature | AWS MCP servers | Azure MCP server |
| --- | --- | --- |
| Approach | Multiple specialized servers | Unified server with modular built-in tools |
| Documentation | Limited; community-driven guidance dominates | Limited; mostly tied to GitHub Copilot |
| Integration scope | IDE-focused; early standalone experiments | GitHub Copilot in VS Code; custom clients supported |
| Service coverage | Fine-grained tools per AWS service | Single server covering a range of Azure services |
| Customization | Requires custom scripting or Bedrock SDK usage | Built-in plugin model; smart error handling and suggestions |
| Tooling maturity | Early-stage, with public GitHub implementations | Early-stage, tightly integrated into Microsoft’s ecosystem |

Understanding the differences

AWS provides multiple dedicated MCP servers, each tailored to a specific AWS service. This modular approach gives developers precise control over capabilities like querying AWS documentation, managing Lambda functions, analyzing costs or generating infrastructure diagrams. However, it also means that setting up multiple integrations may require spinning up and maintaining several MCP endpoints.

In contrast, Azure offers a single MCP server that consolidates multiple tools. This unified design simplifies deployment and reduces the overhead of managing many endpoints. It also includes features like natural language-to-API translation, smart auto-completions and a plugin model that makes it easier to extend functionality.
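The operational difference shows up in the client. With several specialized servers, the client has to know which connection serves which tool. With one consolidated server, every tool resolves to the same connection. The Python sketch below builds such a routing table. Endpoint and build_router are hypothetical helpers. Apart from the documentation tools and azmcp-bestpractices-get named in this post, the tool and server names are placeholders.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Endpoint:
    name: str  # label for one MCP server connection
    tools: Set[str] = field(default_factory=set)


def build_router(endpoints: List[Endpoint]) -> Dict[str, str]:
    """Map each tool name to the endpoint that serves it, rejecting ambiguous names."""
    router: Dict[str, str] = {}
    for endpoint in endpoints:
        for tool in sorted(endpoint.tools):
            if tool in router:
                raise ValueError(f"{tool!r} is exposed by {router[tool]} and {endpoint.name}")
            router[tool] = endpoint.name
    return router


# AWS-style: several specialized servers, so the client manages several connections.
aws_style = [
    Endpoint("aws-documentation", {"search_documentation", "read_documentation", "recommend"}),
    Endpoint("placeholder-cost-server", {"placeholder_get_costs"}),
]
# Azure-style: one consolidated server, so every tool resolves to the same connection.
azure_style = [Endpoint("azure-mcp", {"azmcp-bestpractices-get", "placeholder-storage-list"})]

print(len(set(build_router(aws_style).values())))    # 2
print(len(set(build_router(azure_style).values())))  # 1
```

The duplicate-name check matters mostly in the multi-server case, where two independently developed servers could expose tools with the same name.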

Both platforms are still in the early stages of integration. AWS supports limited IDE integrations (such as with Cursor or VS Code), while Azure is currently focused on GitHub Copilot’s Agent Mode in VS Code. Developers who prefer flexibility, or who are building custom agents outside the Microsoft ecosystem, may find Azure’s current setup limiting, though promising for the long term.

- AWS favors flexibility through specialization — with dedicated servers per task and service, ideal for modular architectures and custom agent workflows.

- Azure focuses on simplicity and cohesion — delivering broad coverage through one unified interface, designed to work seamlessly with Microsoft tooling.

- Both ecosystems are still maturing, with strong potential but limited out-of-the-box support for non-IDE-based workflows.

In the end, the choice may depend on the existing toolchain and developer preferences. If you need fine-grained control across specific AWS services, AWS’s MCP ecosystem offers depth. If you prefer simplicity, a centralized interface, and native integration with Microsoft tools, Azure’s MCP server is a strong contender.

As MCP evolves, both cloud providers are laying down foundational infrastructure for a new era of agent-native cloud computing, one where AI doesn’t just run in the cloud but works with it.

Final thoughts

MCP represents a significant step toward integrating AI agents with cloud services in a practical way. Both AWS and Azure are actively developing MCP strategies, but with different approaches. AWS focuses on specialized servers for individual services, offering granular control. Azure aims for a unified server with integrated tools, prioritizing simplicity within its ecosystem.

The future of MCP holds immense potential for automating cloud workflows, reducing DevOps friction, and enabling natural language interactions with complex cloud environments. As the technology matures and documentation improves, we can expect wider adoption and the development of more sophisticated agent-driven workflows.