Exploring NVIDIA Isaac GR00T

By Andrés Escobar, Julio Castillo, Matías Cam and Santiago Ferreiros

Humanoid robotics is profoundly transforming our relationship with machines by enabling more natural and adaptable interactions. In this rapidly evolving field, NVIDIA Isaac GR00T stands out as a platform that integrates multimodal artificial intelligence capable of processing data from various sources, understanding and replicating human actions, and adapting autonomously to dynamic environments. It marks a significant step forward for key sectors such as industry, healthcare, and sustainability, while also addressing global challenges such as labor shortages, demand for customization, and the pursuit of efficient and sustainable solutions.

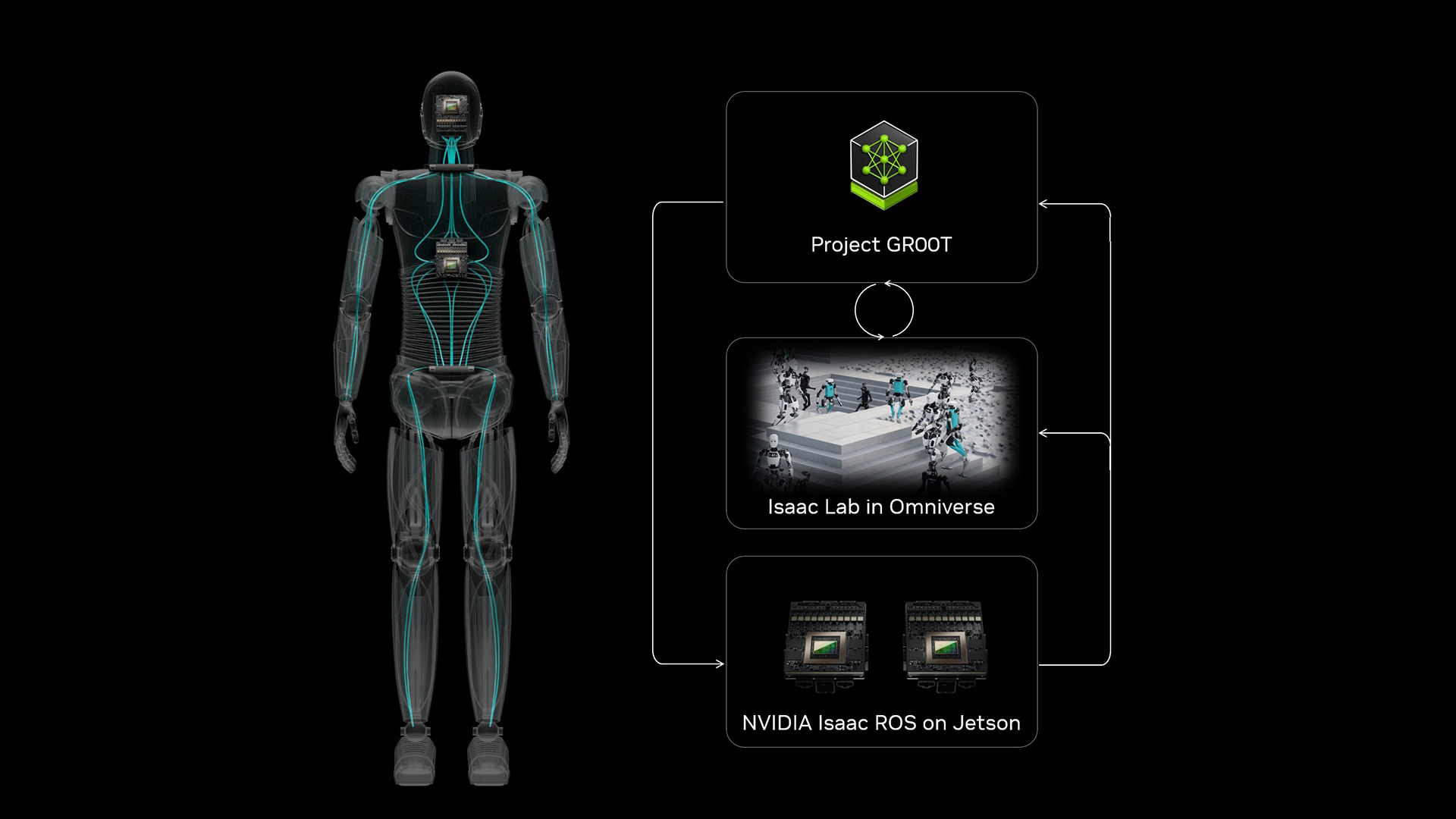

Figure 1: NVIDIA Isaac GR00T ecosystem linking GR00T, Isaac Lab in Omniverse, and Isaac ROS on Jetson for robotics development.

The impact of NVIDIA Isaac GR00T extends across a wide audience, including researchers, technology developers, business leaders, and robotics enthusiasts seeking to harness its potential. Artificial intelligence engineers find in GR00T a tool for exploring models that allow robots to learn, adapt, and collaborate on complex tasks, while strategic decision-makers in industrial sectors can leverage it to align AI capabilities with society’s emerging needs. Beyond increasing productivity and improving quality of life, this technology can act as a catalyst for a future in which humans and robots collaborate efficiently, expanding the possibilities for development in a constantly evolving world.

What is NVIDIA Isaac GR00T?

NVIDIA Isaac GR00T, or Generalist Robot 00 Technology, is an innovative platform that establishes a new framework for humanoid robotics development through the integration of artificial intelligence (AI) and multimodal learning capabilities. Isaac GR00T acts as the “mind” of humanoid robots, enabling them to understand natural language, analyze information from multiple sources such as text, voice, video, and human demonstrations, and acquire essential skills to adapt to complex tasks in various environments.

The multimodal approach of GR00T lies in its ability to process and combine information from different channels, enabling robots to understand contexts holistically and perform actions with a high level of skill and coordination. By combining these data streams, GR00T not only accelerates learning but also makes human-robot interaction more intuitive, helping robots interpret human intentions and demands.
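The post does not detail how GR00T fuses modalities internally. As an illustration only, a common baseline is late fusion: each modality is encoded separately, and the resulting embeddings are normalized and concatenated into a single vector that a policy can consume. In the minimal sketch below, the modality names and embedding sizes are hypothetical, and the input vectors stand in for the outputs of real encoders.

```python
import math
from typing import Dict, List


def l2_normalize(vec: List[float]) -> List[float]:
    """Scale a vector to unit length so no modality dominates by magnitude."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def late_fusion(embeddings: Dict[str, List[float]], dims: Dict[str, int]) -> List[float]:
    """Concatenate per-modality embeddings in the fixed order given by `dims`.

    Modalities missing from a sample are zero-filled so the fused vector
    always has the same layout and length.
    """
    fused: List[float] = []
    for name, dim in dims.items():  # dicts preserve insertion order
        vec = embeddings.get(name)
        if vec is None:
            fused.extend([0.0] * dim)  # modality absent in this sample
            continue
        if len(vec) != dim:
            raise ValueError(f"{name}: expected {dim} values, got {len(vec)}")
        fused.extend(l2_normalize(vec))
    return fused


# Hypothetical embedding sizes for three modalities.
DIMS = {"text": 3, "image": 4, "audio": 2}
sample = {"text": [3.0, 0.0, 4.0], "image": [1.0, 1.0, 1.0, 1.0]}  # no audio in this sample
fused = late_fusion(sample, DIMS)
print(fused)
```

Zero-filling absent modalities keeps the fused layout fixed, so a downstream network always receives inputs of the same size; learned fusion, such as cross-attention in transformer models, is a more expressive alternative.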

GR00T has been designed to support both rapid and continuous learning in simulated and real-world scenarios, allowing robots to develop skills that go beyond mere mechanical execution. Because robots can observe and learn from human actions, as mentioned earlier, they can acquire practical knowledge that enables them to operate in dynamic environments and respond to real-time challenges with high precision and adaptability.

To achieve this, NVIDIA Isaac GR00T integrates advanced technologies such as:

- Jetson Thor: A specialized system-on-chip (SoC) for complex robotic tasks.

- Omniverse and Isaac Sim: Tools for realistic simulation and dynamic scenario training.

- TensorFlow and PyTorch: Advanced deep learning frameworks for skill development.

- Isaac Lab: An open environment for robotic policy development and learning.

Key Features of NVIDIA Isaac GR00T

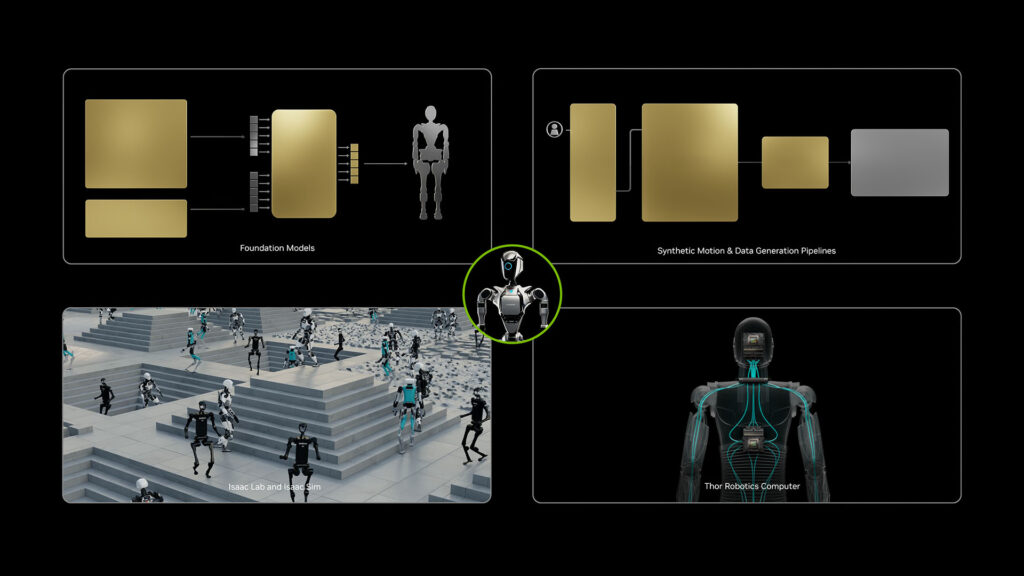

Figure 2: NVIDIA Isaac GR00T’s core components: foundation models, synthetic motion generation pipelines, Isaac Lab and Sim integration, and Thor Robotics Computer.

Reinforcement Learning (RL) Focus

- Combines reinforcement and imitation learning to train humanoid robots efficiently.

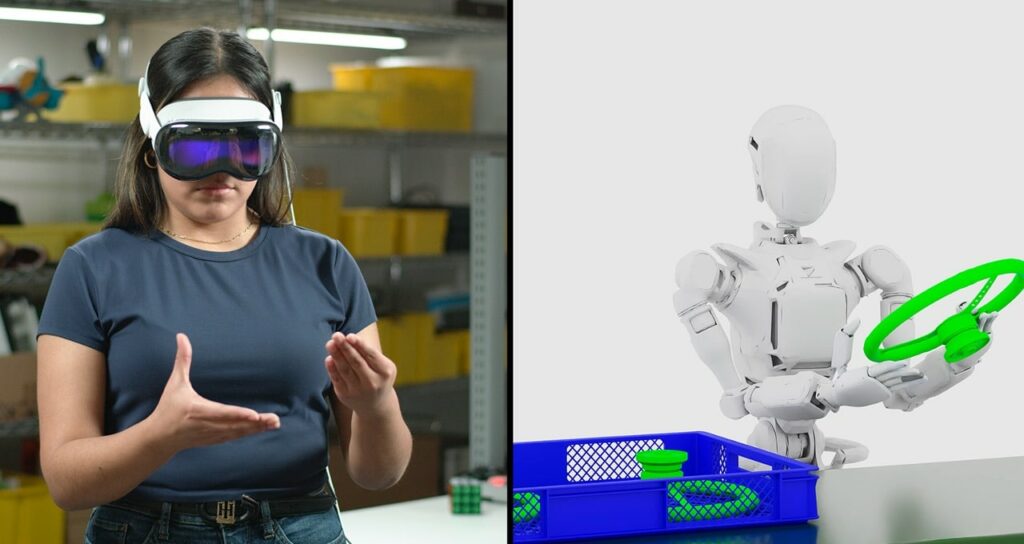

- Imitation Learning: Tools like GR00T-Teleop and devices like Apple Vision Pro capture human actions in a digital twin for robots to replicate in simulations.

- Reinforcement Learning: Supported by Isaac Lab (built on Isaac Sim), which lets robots learn through trial and error and streamlines their training workflows.
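To make the combination of the two paradigms concrete, the toy sketch below first fits a policy to (state, action) demonstrations by behavior cloning, then refines it with a trial-and-error search on a reward signal. Everything here is an assumption for illustration: a one-parameter linear policy on a 1-D system, synthetic "human" demonstrations, and simple random-search hill climbing standing in for the RL algorithms Isaac Lab would actually run.

```python
import random
from typing import List, Tuple


def rollout_return(k: float, starts: List[float], horizon: int = 5) -> float:
    """Average return of the linear policy a = k * x on the toy system x' = x + a.

    Each step is rewarded with -x**2, so the best gain is k = -1 (reach 0 in one step).
    """
    total = 0.0
    for x0 in starts:
        x = x0
        for _ in range(horizon):
            x = x + k * x
            total -= x * x
    return total / len(starts)


def behavior_cloning(demos: List[Tuple[float, float]]) -> float:
    """Imitation step: least-squares fit of a = k * x to (state, action) pairs."""
    return sum(x * a for x, a in demos) / sum(x * x for x, _ in demos)


def rl_finetune(k: float, starts: List[float], iters: int = 200,
                step: float = 0.05, seed: int = 0) -> float:
    """RL step (random-search hill climbing): keep a perturbed gain only if it scores higher."""
    rng = random.Random(seed)
    best = rollout_return(k, starts)
    for _ in range(iters):
        candidate = k + rng.gauss(0.0, step)
        score = rollout_return(candidate, starts)
        if score > best:
            k, best = candidate, score
    return k


rng = random.Random(42)
# Hypothetical demonstrations from an operator who corrects about 70% of the error per step.
states = [rng.uniform(-1.0, 1.0) for _ in range(50)]
demos = [(x, -0.7 * x + rng.gauss(0.0, 0.05)) for x in states]
starts = [rng.uniform(-1.0, 1.0) for _ in range(20)]

k_bc = behavior_cloning(demos)
k_rl = rl_finetune(k_bc, starts)
print(f"BC:    k={k_bc:.3f}, return={rollout_return(k_bc, starts):.4f}")
print(f"BC+RL: k={k_rl:.3f}, return={rollout_return(k_rl, starts):.4f}")
```

The demonstrations give the policy a sensible starting point, and the reward-driven search then improves on the operator's behavior, which is the usual motivation for pairing the two approaches.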

Foundation AI Models

- Built on transformer-based architectures using TensorFlow and PyTorch for complex tasks like object recognition, gesture interpretation, speech understanding, and emotional intelligence.

- Powered by the Jetson Thor SoC, featuring a Blackwell-based GPU and a transformer engine for seamless human-robot interaction.

Multimodal Capabilities

- Integrates text, images, videos, audio, and live demonstrations to execute context-specific tasks.

- Uses advanced vision models, language processing, and motor control (e.g., MaskedMimic) to recreate complete human movements from partial descriptions or natural language commands.

Hardware Compatibility

- Optimized to work with NVIDIA GPUs and robotic platforms for high performance in complex tasks.

- The Jetson Thor SoC delivers 800 teraflops of 8-bit AI performance and pairs it with a high-performance CPU cluster and 100 GB of Ethernet bandwidth, providing robust support for demanding humanoid robotics workloads.

How does NVIDIA Isaac GR00T work?

NVIDIA Isaac GR00T operates as a modular and integrated platform designed to accelerate humanoid robot development through advanced AI, simulation, and synthetic data generation. Its architecture is built to tackle the complexities of real-world robot learning and control by leveraging NVIDIA’s high-performance GPUs and simulation technologies. Below is a detailed breakdown of its architecture and workflows.

Overview of its architecture

NVIDIA Isaac GR00T is composed of several specialized workflows, each designed to address specific challenges in humanoid robot development. These workflows can be used independently or combined for more comprehensive solutions:

- GR00T-Teleop: Provides advanced tools for collecting teleoperated demonstration data:

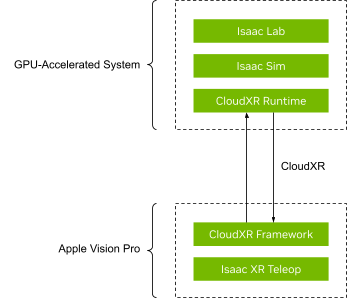

- NVIDIA CloudXR: Connects an Apple Vision Pro headset to a GPU-accelerated system, enabling seamless streaming of actions for humanoid teleoperation. It uses a custom CloudXR runtime optimized for precision and low latency.

- Isaac XR Teleop: Streams teleoperation data to and from NVIDIA Isaac Sim or Isaac Lab, offering developers an intuitive and immersive way to interact with simulated environments using Apple Vision Pro.

- GR00T-Gen: Creates diverse, simulation-ready environments for training robots in tasks like manipulation, navigation, and locomotion. It leverages generative AI models and domain randomization to produce realistic and varied human-centric environments.

- GR00T-Mimic: Facilitates the generation of extensive synthetic motion datasets from limited human demonstrations. This workflow scales data collection efforts through interpolation and simulation, making it a cornerstone for imitation learning.

- GR00T-Dexterity: Offers workflows for fine-grained, dexterous manipulation, using reinforcement learning to train robots for end-to-end grasping and complex object handling.

- GR00T-Mobility: Focuses on locomotion and navigation, providing tools to train generalist models capable of operating across varied and cluttered environments.

- GR00T-Control: Enables whole-body control through advanced motion planning and neural control policies. It supports tasks requiring precision, like humanoid locomotion and dexterous manipulation, with a focus on both teleoperation and autonomous control.

- GR00T-Perception: Integrates multimodal sensing capabilities, such as vision, language, and memory, using tools like ReMEmbR, RT-DETR, and FoundationPose. This suite enhances robot adaptability and situational awareness.

Seamless Integration with the Isaac Ecosystem

Isaac GR00T seamlessly integrates with other prominent NVIDIA projects such as Isaac Lab, Isaac Sim, and Omniverse, enabling researchers and developers to create realistic training datasets, validate policies, and accelerate the development of generalizable robotic behaviors.

Overview of the Isaac Ecosystem

The Isaac ecosystem is NVIDIA’s comprehensive platform for robotics innovation, encompassing tools and frameworks tailored to every stage of robot development. Key components include:

- Isaac Sim: A high-fidelity simulation platform for creating photorealistic environments and testing robot interactions.

- Omniverse: A collaborative design and simulation platform powered by Universal Scene Description (USD), facilitating interoperability and teamwork.

- Isaac Lab: A scalable framework for training and validating robot learning algorithms.

For more information, you can check one of our previous blogs: Exploring NVIDIA Omniverse and Isaac Sim.

Integration with Isaac Sim: High-Fidelity Simulation for Robotics

Isaac Sim serves as the core simulation platform within GR00T’s ecosystem, enabling the creation of highly realistic and diverse training environments. Powered by NVIDIA RTX technology, it delivers photorealistic visuals with precise lighting, reflections, and interactions that closely mimic real-world conditions. Its physics-based simulation ensures accurate modeling of complex robot interactions, such as grasping objects, navigating cluttered spaces, and handling collisions.

Isaac Sim also incorporates domain randomization, introducing variability in textures, lighting, and object placement to enhance the robustness of trained policies. This ensures that robots can generalize effectively when transitioning from simulation to real-world scenarios. Furthermore, its scalability allows workflows like GR00T-Mimic and GR00T-Gen to generate large and diverse datasets required for training humanoid robots.
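At its core, domain randomization means sampling each scene factor from a range for every training episode. Isaac Sim provides its own randomization tooling; the plain-Python sketch below only illustrates the sampling idea, and the `SceneConfig` fields, texture names, and value ranges are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Tuple

TEXTURES = ("wood", "marble", "metal", "plastic")  # hypothetical material names


@dataclass(frozen=True)
class SceneConfig:
    """One randomized scene variant (illustrative fields, not an Isaac Sim API)."""
    light_intensity: float          # arbitrary units
    light_color_temp_k: float       # color temperature in kelvin
    table_texture: str
    object_xy: Tuple[float, float]  # object position in meters, relative to the table center


def sample_scene(rng: random.Random) -> SceneConfig:
    """Draw each randomized factor independently from a fixed range."""
    return SceneConfig(
        light_intensity=rng.uniform(500.0, 2000.0),
        light_color_temp_k=rng.uniform(3000.0, 6500.0),
        table_texture=rng.choice(TEXTURES),
        object_xy=(rng.uniform(-0.3, 0.3), rng.uniform(-0.2, 0.2)),
    )


rng = random.Random(7)  # a fixed seed makes the randomized dataset reproducible
scenes = [sample_scene(rng) for _ in range(5)]
for scene in scenes:
    print(scene)
```

Seeding the generator makes a randomized dataset reproducible, which helps when comparing policies trained on the same set of scene variants.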

Integration with Omniverse: Collaborative Design and Simulation

Omniverse complements Isaac Sim by serving as a central hub for designing, managing, and integrating 3D content into simulation environments. Built on Universal Scene Description (USD), Omniverse enables developers to create and manage complex environments with unparalleled scalability and flexibility. Its real-time collaboration tools allow teams to iterate on designs and scenarios efficiently, reducing development time.

In addition, Omniverse integrates generative AI models, supporting GR00T-Gen’s ability to produce diverse and human-centric environments. This combination of design tools and scalable data management ensures smooth transitions between synthetic data generation and training workflows, enhancing GR00T’s overall capabilities.

Isaac Lab: From Synthetic Data to Robot Policies

Isaac Lab plays a pivotal role in transforming synthetic data generated by Isaac Sim and Omniverse into actionable robot policies. Acting as the training hub for NVIDIA GR00T, Isaac Lab supports reinforcement learning (RL), imitation learning (IL), and policy optimization, enabling robots to acquire complex behaviors like dexterous manipulation and human-like locomotion.

Key contributions of Isaac Lab include:

- Synthetic Data Processing: Motion trajectories from GR00T-Mimic and environmental datasets from GR00T-Gen are seamlessly integrated into Isaac Lab’s training pipelines.

- Scalable Training: Leveraging NVIDIA GPUs, Isaac Lab can train thousands of robots simultaneously, dramatically accelerating policy development.

- Sim-to-Real Transfer: Policies trained in simulation are fine-tuned for real-world deployment, overcoming the challenges of transferring knowledge from virtual environments to physical robots.

This integration ensures that synthetic data is not only diverse and high-quality but also effectively utilized to create robust, generalizable robot behaviors.
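Training "thousands of robots simultaneously" relies on stepping many copies of an environment as one batch and resetting each copy independently when its episode ends. Isaac Lab does this with GPU tensors; the pure-Python sketch below shows only the batching and auto-reset pattern, and `BatchedPointEnv` with its 1-D task is hypothetical, not part of the Isaac Lab API.

```python
import random
from typing import List, Tuple


class BatchedPointEnv:
    """num_envs independent 1-D "robots" stepped together as one batch.

    Each robot must drive its position x to 0. An episode ends when |x| < tol or
    after max_steps, and each finished robot is reset on its own so the batch
    never waits for the slowest episode.
    """

    def __init__(self, num_envs: int, max_steps: int = 20, tol: float = 0.05, seed: int = 0):
        self.rng = random.Random(seed)
        self.num_envs = num_envs
        self.max_steps = max_steps
        self.tol = tol
        self.x = [self._sample_start() for _ in range(num_envs)]
        self.steps = [0] * num_envs

    def _sample_start(self) -> float:
        return self.rng.uniform(-1.0, 1.0)

    def step(self, actions: List[float]) -> Tuple[List[float], List[float], List[bool]]:
        """Apply one action per robot and return (observations, rewards, dones)."""
        rewards, dones = [], []
        for i, a in enumerate(actions):
            self.x[i] += max(-0.2, min(0.2, a))  # clip the action magnitude to 0.2
            self.steps[i] += 1
            done = abs(self.x[i]) < self.tol or self.steps[i] >= self.max_steps
            rewards.append(-abs(self.x[i]))      # reward computed before any reset
            dones.append(done)
            if done:                             # auto-reset only this robot
                self.x[i] = self._sample_start()
                self.steps[i] = 0
        # Observations of reset robots are already their new starting positions.
        return list(self.x), rewards, dones


env = BatchedPointEnv(num_envs=4096)
obs = list(env.x)
completed = 0
for _ in range(50):
    actions = [-0.5 * x for x in obs]  # the same proportional policy for every robot
    obs, rewards, dones = env.step(actions)
    completed += sum(dones)
print(f"{completed} episodes completed by {env.num_envs} parallel robots in 50 steps")
```

Resetting robots individually keeps every slot in the batch busy, which is what lets throughput scale with the number of parallel environments.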

Figure 5: Training of whole-body humanoid robots in Isaac Lab

Applications and Use Cases

Synthetic Motion Generation for Robot Learning

The NVIDIA Isaac GR00T Blueprint for Synthetic Motion Generation is a well-structured, end-to-end pipeline that integrates multiple components to generate high-quality synthetic data for humanoid robot learning. The interaction between these components ensures efficient data collection, trajectory generation, and dataset diversity, enabling robots to learn complex tasks through imitation learning. The pipeline is shown and detailed below.

Figure 6: Synthetic Motion Generation Pipeline for Humanoid Robot Learning

Step 1: Data Collection with GR00T-Teleop

The pipeline begins with GR00T-Teleop, where an operator uses tools like the Apple Vision Pro to teleoperate a robot in a simulated environment. Control signals and the robot’s state are streamed to Isaac Lab, capturing detailed human demonstrations. These demonstrations form the foundational data for generating synthetic trajectories. The immersive teleoperation setup ensures the data represents natural and precise human actions.
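The post does not specify the on-disk format of GR00T-Teleop recordings. A minimal sketch of what one demonstration might hold (timestamped robot state paired with the operator's control signal) could look like this; the `Frame` and `Demonstration` names, their fields, and the JSON serialization are assumptions chosen for readability.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class Frame:
    """One teleoperation sample: robot state plus the operator's command at time t."""
    t: float                      # seconds since the start of the recording
    joint_positions: List[float]  # measured robot joint angles (radians)
    command: List[float]          # operator control signal, e.g. a target hand pose


@dataclass
class Demonstration:
    """A single recorded human demonstration of one task."""
    task: str
    frames: List[Frame] = field(default_factory=list)

    def record(self, t: float, joint_positions: List[float], command: List[float]) -> None:
        self.frames.append(Frame(t, list(joint_positions), list(command)))

    def to_json(self) -> str:
        return json.dumps(asdict(self))  # asdict also converts the nested Frame objects

    @staticmethod
    def from_json(text: str) -> "Demonstration":
        data = json.loads(text)
        return Demonstration(data["task"], [Frame(**f) for f in data["frames"]])


demo = Demonstration(task="pick_cube")  # hypothetical task name
for i in range(3):
    # Illustrative values for a 30 Hz stream.
    demo.record(t=i / 30.0, joint_positions=[0.1 * i, -0.2], command=[0.5, 0.0, 0.1 * i])

restored = Demonstration.from_json(demo.to_json())
print(len(restored.frames), restored == demo)
```

Keeping state and command in the same timestamped frame makes it straightforward to slice a recording into (observation, action) pairs later in the pipeline.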

Step 2: Motion Annotation and Trajectory Generation in GR00T-Mimic

Once the teleoperated demonstrations are collected, they are passed to GR00T-Mimic. This module annotates key points in the collected demonstrations and uses them to generate extensive synthetic motion trajectories. The process includes:

- Trajectory Generation: Producing realistic trajectories that mimic human movements.

- Physics Acceleration: Leveraging Isaac Lab’s accelerated physics engine to refine these trajectories, ensuring they align with real-world dynamics.

- Trajectory Evaluation: Validating the generated trajectories to ensure they meet quality and contextual standards for training.
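The core idea of multiplying a few demonstrations can be sketched with an object-centric transform: express the annotated, object-relative part of a demonstration in the object's frame, replay it around a new object position, bridge the gap from the robot's start pose by interpolation, and keep only trajectories that pass a check. This follows the approach popularized by MimicGen and is a simplified illustration, not GR00T-Mimic's implementation: it uses 2-D translations only, omits the physics refinement step, and all function names, poses, and thresholds are hypothetical.

```python
import random
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) end-effector position in meters


def to_object_frame(segment: List[Point], obj: Point) -> List[Point]:
    """Express waypoints relative to the object they interact with."""
    return [(x - obj[0], y - obj[1]) for x, y in segment]


def from_object_frame(segment: List[Point], obj: Point) -> List[Point]:
    """Place object-relative waypoints around a (new) object position."""
    return [(x + obj[0], y + obj[1]) for x, y in segment]


def interpolate(a: Point, b: Point, n: int) -> List[Point]:
    """Return n evenly spaced points strictly between a and b."""
    return [(a[0] + (b[0] - a[0]) * i / (n + 1), a[1] + (b[1] - a[1]) * i / (n + 1))
            for i in range(1, n + 1)]


def generate(segment: List[Point], demo_obj: Point, new_obj: Point,
             start: Point, n_bridge: int = 5) -> List[Point]:
    """Transform the demo segment to a new object pose and bridge to it from start."""
    moved = from_object_frame(to_object_frame(segment, demo_obj), new_obj)
    return [start] + interpolate(start, moved[0], n_bridge) + moved


def succeeded(traj: List[Point], obj: Point, tol: float = 0.02, workspace: float = 0.5) -> bool:
    """Evaluation: keep trajectories that end at the object and never leave the workspace."""
    end_x, end_y = traj[-1]
    reached = abs(end_x - obj[0]) <= tol and abs(end_y - obj[1]) <= tol
    in_bounds = all(abs(x) <= workspace and abs(y) <= workspace for x, y in traj)
    return reached and in_bounds


# One hypothetical human demo: approach from the start pose, then grasp the object at demo_obj.
demo_obj = (0.1, 0.0)
demo = [(0.0, -0.3), (0.03, -0.2), (0.06, -0.1), (0.08, -0.05), (0.1, -0.02), (0.1, 0.0)]
grasp_start = 3  # annotated key point where the object-relative subtask begins
segment = demo[grasp_start:]

rng = random.Random(1)
dataset = []
for _ in range(100):
    # Some sampled object positions fall outside the workspace on purpose,
    # so the evaluation step has something to reject.
    new_obj = (rng.uniform(-0.6, 0.6), rng.uniform(-0.6, 0.6))
    traj = generate(segment, demo_obj, new_obj, start=demo[0])
    if succeeded(traj, new_obj):
        dataset.append((new_obj, traj))
print(f"kept {len(dataset)} of 100 generated trajectories")
```

In a full pipeline, each kept trajectory would also be executed in a physics simulator before evaluation, so trajectories that look valid geometrically but fail physically are filtered out too.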

Step 3: Environment Diversity with GR00T-Gen

The validated motion trajectories from GR00T-Mimic are then sent to GR00T-Gen, which uses Isaac Sim and Cosmos to create diverse simulation environments. GR00T-Gen incorporates:

- Domain Randomization: Adding variability in environmental factors like lighting, textures, and object placement to enhance the generalizability of the data.

- 3D Scene Generation: Cosmos enriches the synthetic datasets by producing highly realistic 3D environments that replicate real-world conditions.

Step 4: Final Output and Integration

The complete pipeline results in a dataset that combines realistic trajectories and diverse environments. The final output can be used to train robot policies in Isaac Lab, enabling robots to adapt to human-centric environments and tasks efficiently.
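The post does not specify the format of this final dataset. One simple way to combine trajectories and environments is a cross product that pairs every validated trajectory with every scene variant; the sketch below also holds out whole environments for validation. The function, field names, and inputs are hypothetical.

```python
import random
from itertools import product
from typing import Dict, List, Tuple


def assemble_dataset(trajectory_ids: List[str], env_variants: List[Dict],
                     val_fraction: float = 0.2, seed: int = 0) -> Tuple[List[Dict], List[Dict]]:
    """Pair every trajectory with every environment variant and split by environment.

    Whole environments are held out for validation, so validation episodes use
    scene variants the policy never saw during training.
    """
    rng = random.Random(seed)
    names = [env["name"] for env in env_variants]
    rng.shuffle(names)
    n_val = max(1, round(len(names) * val_fraction)) if len(names) > 1 else 0
    val_envs = set(names[:n_val])

    train, val = [], []
    for traj_id, env in product(trajectory_ids, env_variants):
        episode = {"trajectory": traj_id, **env}
        (val if env["name"] in val_envs else train).append(episode)
    return train, val


# Hypothetical inputs: 10 validated trajectories and 6 scene variants (2 textures x 3 light levels).
trajectories = [f"traj_{i:03d}" for i in range(10)]
environments = [
    {"name": f"env_{j}", "texture": texture, "light": light}
    for j, (texture, light) in enumerate(product(["wood", "metal"], [500.0, 1500.0, 3000.0]))
]
train, val = assemble_dataset(trajectories, environments)
print(len(train), len(val))  # 50 10
```

Holding out entire environments rather than random episodes gives a rough check of how well a policy generalizes to scene variants it has not seen.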

Potential Real-World Applications

NVIDIA Isaac GR00T is designed to lay the foundation for future integration of humanoid robots into real-world environments. While its current focus is on validating workflows in virtual simulations, GR00T’s ability to generate high-quality synthetic data and train advanced policies positions it as a critical tool for preparing robots to tackle complex, human-centric tasks. These advancements open the door to several potential applications, including:

- Assistive Robotics in Healthcare: Supporting individuals with mobility challenges, assisting with patient care, and reducing caregiver workloads by taking on clinical tasks.

- Industrial Automation: Performing intricate assembly operations and seamlessly adapting to existing manufacturing workflows, enhancing productivity and flexibility.

- Emergency and Hazardous Environments: Navigating disaster zones, rescuing survivors, and safely handling hazardous materials in conditions unsuitable for human workers.

Although these applications are prospective, GR00T’s focus on simulation-driven development and efficient training workflows is paving the way for humanoid robots to address critical challenges and reshape industries in the near future.

Advantages and Limitations of NVIDIA Isaac GR00T

Advantages

- Accelerated Development: Provides a comprehensive set of tools, including robot foundation models, data pipelines, and simulation frameworks, which significantly speed up the development of humanoid robots.

- Scalability: The platform enables the generation of large synthetic motion datasets from minimal human demonstrations, facilitating extensive training without the need for exhaustive real-world data collection.

- Advanced AI Integration: By incorporating sophisticated AI and machine learning capabilities, GR00T allows robots to efficiently execute tasks based on multimodal instructions, enhancing their adaptability and performance across various applications.

- Seamless Integration with NVIDIA Resources: GR00T is designed to coexist and integrate easily with other NVIDIA initiatives, such as Isaac Sim, Isaac Lab, and Omniverse. This interoperability enables developers to utilize the full breadth of NVIDIA’s ecosystem, ensuring smooth transitions between simulation, data generation, and training workflows.

Limitations

- High Computational Requirements: Implementing GR00T’s advanced AI models and simulation tools necessitates substantial computational resources, which may pose challenges for organizations with limited access to high-performance hardware.

- Learning Curve: The complexity of GR00T’s integrated tools and workflows may require developers to invest time in learning and adapting to the platform, potentially extending the initial development phase.

Conclusion and Future of NVIDIA Isaac GR00T

NVIDIA Isaac GR00T represents a significant advancement in humanoid robotics, offering a robust platform that integrates advanced AI, scalable data generation, and comprehensive simulation tools. By addressing key challenges in robot learning and development, GR00T paves the way for more intelligent, adaptable, and capable humanoid robots. As the platform evolves, we can anticipate further enhancements in AI integration, user accessibility, and computational efficiency, solidifying GR00T’s role as a catalyst for innovation in the rapidly growing field of humanoid robotics.

Glossary

What Is a Humanoid Robot?

Humanoids are general-purpose, bipedal robots modeled after the human form factor and designed to work alongside humans to augment productivity. They’re capable of learning and performing a variety of tasks, such as grasping an object, moving a container, loading or unloading boxes, and more.

What Is Robot Learning?

Robot learning is a collection of algorithms and methodologies that help a robot learn new skills such as manipulation, locomotion, and classification in either a simulated or real-world environment.

Sources:

- Advancing Humanoid Robot Sight and Skill Development with NVIDIA Project GR00T

- Building a Synthetic Motion Generation Pipeline for Humanoid Robot Learning

- NVIDIA Makes Cosmos World Foundation Models Openly Available to Physical AI Developer Community

- Marvik’s Blog: Exploring NVIDIA Omniverse and Isaac Sim

- Marvik’s Blog: Scene-Based Synthetic Dataset Generation (SDG) using Isaac Sim

- Marvik’s Blog: Nvidia Omniverse – Isaac Sim – Introduction to Universal Scene Description

- https://nvidianews.nvidia.com/news/foundation-model-isaac-robotics-platform

- https://medium.com/@nimritakoul01/project-gr00t-generalist-humanoid-robot-00-technology-from-nvidia-80f12f4d0449

- https://blogs.nvidia.com/blog/isaac-gr00t-blueprint-humanoid-robotics/