MoCha’s AI talking characters bring your screenplay to life

By Julieta Carricondo

Video from MoCha project page

Meet MoCha’s AI talking characters, a groundbreaking technology that takes a screenplay (combining speech and text) and, while it may not produce the full movie, brings its characters to life. Not just a floating Zordon head, not just Hotel Reverie, but a full-body character with natural movements and context. It’s the kind of tech that feels straight out of that Black Mirror episode, only it’s very real.

Talking characters play a critical role in delivering messages and emotionally engaging audiences. In film, dialogue is a fundamental storytelling tool – iconic lines like “May the Force be with you” from Star Wars show how a single phrase can resonate far beyond the screen (I know, I read it in a Jedi voice too). This communicative power also drives a wide range of applications, from virtual assistants and digital avatars to advertising and educational content. MoCha builds on it by enabling more nuanced and expressive digital performances.

On the technology front, video foundation models such as Sora have made impressive strides in generating visually compelling video from text prompts. Building on this, MoCha adds speech conditioning, enabling more expressive and lifelike talking characters. Detailed prompts can define a character’s traits, emotional state, movements, scene setting, narrative structure, and even facing direction. For example:

“A close-up shot of a man sitting on a dark gray couch… Behind the man are three white cylindrical light fixtures with yellow lights inside them… the man continues to speak to the camera while he holds a lit cigar, the smoke curling gently into the air…”

Result video from MoCha project page

On the other hand, speech-driven video generation methods such as EMO and Hallo3 have focused on facial movements during speech, but have remained largely limited to realistic scenarios.

MoCha combines the best of both worlds by tackling the complex task of jointly modeling coordinated body movements, facial expressions, and multimodal alignment. This integrated approach sets a new standard for voice-driven video creation.

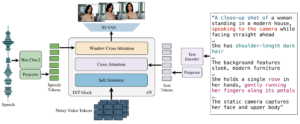

An architecture overview

The core architecture is a diffusion transformer (DiT) that generates a unified sequence of video tokens, conditioned on the speech audio and the text prompt through cross-attention. It starts from a noisy video in the latent space of a 3D VAE, which compresses the video spatially and temporally. It then applies self-attention over the video tokens and cross-attention to the text and audio condition tokens, denoising all video frames in parallel. To keep speech and motion aligned, each video token is matched to a local window of audio tokens.

To effectively synchronize speech with video, MoCha introduces a speech-video window attention mechanism. This attention selectively aligns audio features with temporally relevant video frames, ensuring the animation reflects prosodic nuances and speech rhythm.
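To make the window idea concrete, here is a minimal sketch of a speech-video window attention mask in plain Python. It assumes audio tokens are spread evenly over the clip, so each video (latent) frame maps to a contiguous span of audio tokens; the function names and the `window` parameter are hypothetical, and MoCha’s actual window sizes and token rates are not described in this article.

```python
import math


def speech_video_window_mask(num_video_tokens, num_audio_tokens, window=1):
    """Return mask[i][j] == True if video token i may attend to audio token j.

    Hypothetical sketch: assumes audio tokens are evenly spaced over the clip,
    so video frame i covers audio tokens [i*A/V, (i+1)*A/V). `window` widens
    that span by `window` neighbouring video frames on each side.
    """
    mask = []
    for i in range(num_video_tokens):
        lo_frame = max(0, i - window)
        hi_frame = min(num_video_tokens, i + window + 1)
        # Integer floor/ceil keeps every row non-empty even when A < V.
        start = (lo_frame * num_audio_tokens) // num_video_tokens
        end = -(-(hi_frame * num_audio_tokens) // num_video_tokens)
        mask.append([start <= j < end for j in range(num_audio_tokens)])
    return mask


def masked_softmax(scores, allowed):
    """Softmax over attention scores; disallowed audio tokens get zero weight."""
    logits = [s if ok else -math.inf for s, ok in zip(scores, allowed)]
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]  # exp(-inf) == 0.0
    total = sum(exps)
    return [e / total for e in exps]


# Example: 4 latent video frames, 8 audio tokens, no neighbouring frames.
mask = speech_video_window_mask(4, 8, window=0)
weights = masked_softmax([0.5] * 8, mask[1])
print([round(w, 2) for w in weights])  # → [0.0, 0.0, 0.5, 0.5, 0.0, 0.0, 0.0, 0.0]
```

Widening `window` lets each frame see a little speech context before and after it, which is one plausible way to capture prosody that spans more than a single frame.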

MoCha enables the generation of conversations between multiple characters through structured prompt templates that include character tags and turn-based dialogue control. Multi-clip generation works like single-clip generation, except that all clips are generated simultaneously, with extra self-attention across their tokens to keep the characters consistent from clip to clip. The audio signal determines when the scene cuts from one clip to the next. To keep the prompt from becoming overly verbose and complex, MoCha uses a template with these elements:

- Number of clips: Two video clips

- Characters:

  - Person 1: A man stands inside a heavy-duty armored exosuit with a chainsaw arm.

  - Person 2: An angry cyborg woman.

- First clip: Person 1 stands in a grassy, wooded area, striking a dramatic pose, mid-action, ready to tear into whatever shark dares to fly his way. The camera gradually zooms in on Person 1’s face.

- Second clip: Person 2 blasts flying sharks with her bionic arm cannon as a massive twister tears through the city skyline behind her.
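The template can be thought of as structured data rendered into text. The sketch below is hypothetical: the `build_multiclip_prompt` helper, the field order, and the `Clip N:` labels are assumptions for illustration, not MoCha’s published prompt format.

```python
def build_multiclip_prompt(characters, clips):
    """Assemble a structured multi-clip prompt in a hypothetical plain-text format.

    characters: dict mapping a tag (e.g. "Person 1") to a short description.
    clips: list of clip descriptions that refer to characters only by tag.
    """
    lines = [f"Number of clips: {len(clips)}", "Characters:"]
    for tag, description in characters.items():
        lines.append(f"  {tag}: {description}")
    for index, clip in enumerate(clips, start=1):
        # Each clip should reuse at least one declared tag, so the same character
        # identity is referenced across clips instead of being re-described.
        if not any(tag in clip for tag in characters):
            raise ValueError(f"clip {index} does not mention any character tag")
        lines.append(f"Clip {index}: {clip}")
    return "\n".join(lines)


prompt = build_multiclip_prompt(
    {
        "Person 1": "A man in a heavy-duty armored exosuit with a chainsaw arm.",
        "Person 2": "An angry cyborg woman.",
    },
    [
        "Person 1 strikes a dramatic pose in a grassy, wooded area.",
        "Person 2 blasts flying sharks with her bionic arm cannon.",
    ],
)
print(prompt)
```

Describing each character once and referring to them by tag afterwards is what keeps the prompt short, which is the point of the template.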

Training process

MoCha is built on a pretrained 30B-parameter diffusion transformer. To address the limited availability of large-scale speech-annotated video datasets, MoCha adopts a joint training approach that uses both speech-labeled and text-labeled data. This multimodal fusion enhances the model’s ability to generalize across different character styles, motion patterns, and dialogue contexts. To further increase diversity and robustness, 20% of training uses text-to-video data alone, without accompanying audio.
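The 80/20 mix can be sketched as a simple sampler that, for each training sample, draws from the text-only pool with probability 0.2. This is an illustrative assumption: the `Example` and `mixed_batches` names are hypothetical, and the paper may mix data at the batch or dataset level rather than per sample.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Example:
    video_id: str
    caption: str
    audio_path: Optional[str]  # None marks a text-only (text-to-video) sample


def mixed_batches(speech_pool, text_pool, batch_size, text_only_fraction=0.2, seed=0):
    """Yield batches where each sample is text-only with probability text_only_fraction."""
    rng = random.Random(seed)
    while True:
        batch = []
        for _ in range(batch_size):
            pool = text_pool if rng.random() < text_only_fraction else speech_pool
            batch.append(rng.choice(pool))
        yield batch


speech_pool = [Example(f"s{i}", "a person talking", f"s{i}.wav") for i in range(100)]
text_pool = [Example(f"t{i}", "a person walking", None) for i in range(100)]
batches = mixed_batches(speech_pool, text_pool, batch_size=50)
samples = [ex for _ in range(100) for ex in next(batches)]
text_share = sum(ex.audio_path is None for ex in samples) / len(samples)
print(f"text-only share: {text_share:.3f}")
```

Over many draws the text-only share settles near 20%, while any single batch can deviate from it.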

The training corpus combines proprietary and public datasets with varying levels of annotation quality. MoCha’s performance was evaluated using a mix of automatic metrics – assessing lip-sync accuracy and motion quality – and human studies focusing on realism, expressiveness, and overall user preference.

All models are trained using a spatial resolution close to 720×720, with support for multiple aspect ratios. Each model is optimized to produce 128-frame videos at 24 fps, resulting in outputs of approximately 5.3 seconds in length.
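The clip length follows directly from the frame count and frame rate: 128 / 24 ≈ 5.33 s. The sketch below also estimates the latent grid the diffusion transformer would work on; the VAE compression factors (8× temporal, 8× spatial) and the 2×2 patch size are illustrative assumptions, not values taken from the MoCha paper.

```python
def clip_stats(num_frames=128, fps=24, height=720, width=720,
               t_down=8, s_down=8, patch=2):
    """Duration plus an estimated latent grid and token count.

    t_down, s_down and patch are assumed values for illustration only.
    """
    duration_s = num_frames / fps
    latent_shape = (num_frames // t_down, height // s_down, width // s_down)
    t, h, w = latent_shape
    num_tokens = t * (h // patch) * (w // patch)
    return duration_s, latent_shape, num_tokens


duration, latent_shape, num_tokens = clip_stats()
print(f"{duration:.2f} s, latent {latent_shape}, {num_tokens} tokens")
# → 5.33 s, latent (16, 90, 90), 32400 tokens
```

Under these assumed factors, even a 5-second clip yields tens of thousands of tokens, which helps explain why longer clips are costly for full attention.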

Inference requires roughly two NVIDIA A100 80 GB GPUs. This is an estimate based on the model’s 30B parameters, unquantized FP32 weights, and a 20% overhead factor: 30B × 4 bytes × 1.2 ≈ 144 GB.
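The estimate can be reproduced with a widely used rule of thumb (presumably the one behind the "Calculating GPU memory formula" reference): M = (P × 4 bytes) / (32 / Q) × 1.2, where P is the parameter count, Q the bits per parameter, and 1.2 the overhead factor. This is a back-of-the-envelope sketch; a video diffusion model’s real footprint also depends on resolution, clip length, and implementation details.

```python
import math


def inference_memory_gb(num_params, bits=32, overhead=1.2):
    """Rule-of-thumb serving memory: M = (P * 4 bytes) / (32 / Q) * overhead.

    The flat overhead factor stands in for everything beyond the weights.
    """
    bytes_needed = (num_params * 4) / (32 / bits) * overhead
    return bytes_needed / 1e9  # decimal gigabytes


def gpus_needed(memory_gb, gpu_memory_gb=80):
    # round() guards against tiny floating-point error before taking the ceiling
    return math.ceil(round(memory_gb / gpu_memory_gb, 9))


fp32 = inference_memory_gb(30e9, bits=32)
fp16 = inference_memory_gb(30e9, bits=16)
print(f"FP32: {fp32:.0f} GB -> {gpus_needed(fp32)} x A100 80GB")
print(f"FP16: {fp16:.0f} GB -> {gpus_needed(fp16)} x A100 80GB")
```

Halving the precision halves the estimate (about 72 GB at 16 bits), which is why quantization is the usual first lever for fitting such a model on fewer GPUs.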

Evaluation framework

To evaluate the model, the authors built MoCha-Bench, a benchmark for the talking character generation task, scored by human raters on five criteria, each rated from 1 to 4.

- Lip Sync Quality: measures the synchronization between the spoken audio and the character’s lip movements.

- Facial Expression Naturalness: evaluates facial expressions in relation to the given prompt and speech content.

- Action Naturalness: evaluates whether body movements and gestures look natural given the speech and the prompt.

- Text Alignment: verifies that the context and actions match the prompt.

- Visual Quality: checks for artifacts, discontinuities, or glitches.
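Aggregating such scores is straightforward: average each axis over raters after checking the 1 to 4 range. In the sketch below the axis keys are hypothetical names for the five criteria, and the ratings are toy values, not results from the paper.

```python
from statistics import mean

# Hypothetical keys for the five MoCha-Bench criteria.
AXES = (
    "lip_sync",
    "facial_expression",
    "action",
    "text_alignment",
    "visual_quality",
)


def aggregate_ratings(ratings):
    """Average 1-4 human scores per axis; each rating is a dict axis -> int."""
    summary = {}
    for axis in AXES:
        scores = [rating[axis] for rating in ratings]
        if any(not 1 <= score <= 4 for score in scores):
            raise ValueError(f"{axis}: scores must be in the range 1-4")
        summary[axis] = mean(scores)
    return summary


# Toy ratings from two imaginary raters (not data from the paper).
ratings = [
    {"lip_sync": 4, "facial_expression": 3, "action": 3, "text_alignment": 4, "visual_quality": 3},
    {"lip_sync": 3, "facial_expression": 3, "action": 2, "text_alignment": 4, "visual_quality": 4},
]
print(aggregate_ratings(ratings))
```

Keeping the axes separate, rather than collapsing them into one score, shows where a model is weak, for example good lip sync but stiff body motion.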

Related works

If you want to learn more about AI-generated character video, whether for talking avatars, animated storytelling, or controllable video synthesis, there is a growing body of research worth exploring.

MagicInfinite aims to produce high-fidelity talking videos featuring single or multiple full-body characters of various types, with an emphasis on facial poses. It also uses a diffusion transformer, with 3D full-attention mechanisms and a sliding-window denoising strategy, and incorporates a two-step curriculum learning scheme that integrates audio for lip synchronization, text for expressive dynamics, and reference images for identity preservation. It uses region-specific masks for precise speaker identification in multi-character scenes. It can generate a 10-second 540×540 video in 10 seconds, or a 720×720 video in 30 seconds, on 8 H100 GPUs with no loss of quality.

Result video from MagicInfinite project page
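Sliding-window denoising lets a model trained on short clips handle longer sequences. The sketch below shows one common generic form (overlapping windows, averaged where they overlap); it is an illustration under that assumption, not MagicInfinite’s actual implementation, and `denoise_fn` is a hypothetical stand-in for a real model call.

```python
def sliding_windows(num_frames, window, stride):
    """Overlapping (start, end) windows that together cover every frame."""
    if not 0 < stride <= window:
        raise ValueError("need 0 < stride <= window so windows overlap or touch")
    if window >= num_frames:
        return [(0, num_frames)]
    starts = list(range(0, num_frames - window + 1, stride))
    if starts[-1] + window < num_frames:
        starts.append(num_frames - window)  # final window flush with the end
    return [(s, s + window) for s in starts]


def denoise_long_sequence(latents, window, stride, denoise_fn):
    """One denoising step over a long sequence, window by window.

    Each window is denoised independently; overlapping frames are averaged.
    `latents` is a list of per-frame values (scalars here for simplicity).
    """
    n = len(latents)
    acc = [0.0] * n
    count = [0] * n
    for start, end in sliding_windows(n, window, stride):
        for offset, value in enumerate(denoise_fn(latents[start:end])):
            acc[start + offset] += value
            count[start + offset] += 1
    return [a / c for a, c in zip(acc, count)]


frames = [float(i) for i in range(11)]
print(sliding_windows(11, window=4, stride=3))  # → [(0, 4), (3, 7), (6, 10), (7, 11)]
halved = denoise_long_sequence(frames, 4, 3, lambda chunk: [v * 0.5 for v in chunk])
print(halved)
```

Averaging the overlaps smooths the seams between windows; in a real sampler this would be repeated at every denoising step.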

Teller focuses on real-time, streaming generation of audio-driven talking-head videos. It disentangles motion from appearance: facial motion is generated autoregressively from the audio and then rendered into the portrait video. While Teller excels at capturing subtle facial expressions and ensuring temporal consistency, MoCha extends beyond talking heads to full-body character animation and multi-character interactions, addressing a broader range of storytelling elements.

Result video from Teller project page

ChatAnyone targets the real-time generation of stylized portrait video, ranging from talking heads to upper-body interactions. It emphasizes rich facial expressions and synchronized body movements, including hand gestures, to enhance interactive video chats. ChatAnyone uses hierarchical motion diffusion models that incorporate both explicit and implicit motion representations derived from audio inputs. Explicit hand control signals are integrated into the generator to produce detailed hand gestures, enhancing the overall realism and expressiveness of the portrait videos.

Final Thoughts

With MoCha, we’re witnessing a significant leap toward making AI-generated characters more cinematic, expressive, and context-aware. Bridging the gap between text-to-video diffusion models and voice-driven animation, MoCha introduces a new paradigm for full-body, multi-character storytelling. Its ability to synchronize complex audio cues with facial expressions, body motion, and scene-level narrative makes it a versatile tool for creators across industries. The potential of MoCha’s AI talking characters to revolutionize fields from filmmaking to virtual agents is immense.

Unlike previous systems that focus on either facial realism or basic body gestures, MoCha’s unified diffusion transformer brings these dimensions together to generate video clips that are not only visually rich, but also emotionally resonant and contextually aligned with the narrative prompt.

As AI-generated video content becomes more prevalent, tools like MoCha show that we’re not far from having natural, dynamic digital actors who can read a script and perform it convincingly. For now, however, each generated clip is limited to about 5.3 seconds.

MoCha code and weights will be released by Meta soon.

References

Calculating GPU memory formula

MoCha: Towards Movie-Grade Talking Character Synthesis

MagicInfinite: Generating Infinite Talking Videos with Your Words and Voice

ChatAnyone: Stylized Real-time Portrait Video Generation with Hierarchical Motion Diffusion Model

Teller: Real-Time Streaming Audio-Driven Portrait Animation with Autoregressive Motion Generation