From zero to NeRF: what to expect data-wise on a NeRF project

By Nahuel Garcia

In this follow-up to our initial exploration of NeRF (Neural Radiance Fields), we’ll dive deeper into the essential aspects of data preparation and management for utilizing this innovative technology. Additionally, we’ll highlight a selection of practical tools that can aid you in your NeRF journey, enabling you to better understand and apply its capabilities.

Recap on NeRF

In the previous article in this series we talked about:

- What are NeRFs: A state-of-the-art Deep Learning architecture used for synthesizing 3D volumes from 2D images.

- Classic vs model-based approaches: The “classic” approach requires training a separate model for each scene, while more recent model-based approaches aim to generalize across multiple similar scenes via pretraining.

- Key terminology: what we mean by a scene, a view, rendering, the camera position, volume rendering, and view synthesis.

- Review of recent research: Including scene-based approaches (NeROIC, InstantNeRF) and model-based approaches (PixelNeRF, LOLNeRF).

- Challenges: The trade-offs between scene-based and model-based approaches in terms of rendering quality, training time, and required data.

For a more in-depth review of the capabilities of NeRFs and the key terminology, see our previous entry in this series.

The data

Most NeRF datasets contain several scenes, each with two key components:

- Images – multiple views of the same scene from different angles

- Camera positional and angular information for each image

However, the datasets we have found differ in how they present this information, especially the camera position, which can be expressed in several ways: as intrinsic coordinates in .txt files, as rotation matrices in .json or .npz (NumPy) files, or as more scene-specific labels such as “camera_A, camera_B, …camera_C” tied to the camera layout used during data collection. Be careful when choosing a dataset and a model: you may need to convert the data from one format to another to make them work together.
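As an illustration of this kind of conversion, here is a minimal sketch in plain Python. It assumes the source dataset stores each pose as a world-to-camera rotation matrix and translation vector, and that the target model expects a 4×4 camera-to-world matrix per image in a JSON file. The function names and the `frames` / `file_path` / `transform_matrix` keys are assumptions chosen for the example; check the actual layout your dataset and model use. Axis conventions (for example, which way the camera looks) also vary between formats and are not handled here.

```python
import json


def transpose(matrix):
    """Transpose a 3x3 matrix given as a list of rows."""
    return [[matrix[r][c] for r in range(3)] for c in range(3)]


def world_to_camera_to_c2w(rotation, translation):
    """Invert a world-to-camera pose (x_cam = R @ x_world + t).

    Returns the 4x4 camera-to-world matrix [[R^T, -R^T t], [0, 0, 0, 1]].
    """
    r_t = transpose(rotation)
    # Camera center in world coordinates: -R^T t
    center = [-sum(r_t[i][j] * translation[j] for j in range(3)) for i in range(3)]
    c2w = [r_t[i] + [center[i]] for i in range(3)]
    c2w.append([0.0, 0.0, 0.0, 1.0])
    return c2w


def build_transforms(poses):
    """Build a JSON-serializable dict from {image_name: (rotation, translation)}.

    The key names are an assumption for this example, not a fixed standard.
    """
    frames = [
        {"file_path": name, "transform_matrix": world_to_camera_to_c2w(r, t)}
        for name, (r, t) in sorted(poses.items())
    ]
    return {"frames": frames}


if __name__ == "__main__":
    # One hypothetical view: a 90-degree rotation about the z axis.
    example = {"view_0.png": ([[0, -1, 0], [1, 0, 0], [0, 0, 1]], [1, 2, 3])}
    print(json.dumps(build_transforms(example), indent=2))
```

Going the other way (camera-to-world back to world-to-camera) uses the same formula, since inverting a rigid transform twice returns the original pose.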

In the tools section we will be introducing a few useful apps that use only a video or images of the scene to render it, so we don’t have to worry about formats, camera poses, etc.

Datasets:

There are two broad categories of datasets when it comes to NeRFs: Synthetic datasets, mostly created by taking “snapshots” of 3D models of an object, and real-world datasets.

Synthetic datasets:

- ShapeNet: A dataset consisting of thousands of 3D models in low resolution (64×64 pixels) corresponding to more than 200 classes of common objects.

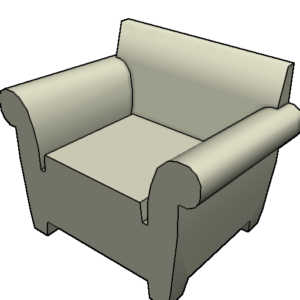

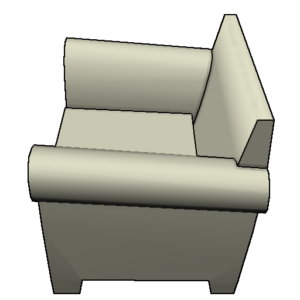

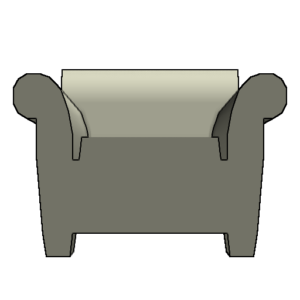

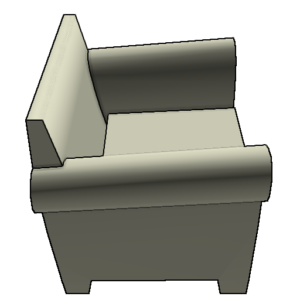

- MobileBrick: The authors propose a novel data capturing and 3D annotation pipeline to obtain precise 3D ground-truth shapes without relying on expensive 3D scanners. The key to creating the precise 3D ground-truth shapes is using LEGO models, which are made of LEGO bricks with known geometry. This dataset contains two distinctive features:

- A large number of RGBD sequences with precise 3D ground-truth annotations.

- The RGBD images were captured using mobile devices so algorithms can be tested in a realistic setup for mobile AR applications.

Real-world datasets:

- DTU MVS 2014: A multi-view stereo dataset containing 80 scenes of large variability. Each scene consists of 49 or 64 accurate camera positions and reference structured light scans, all acquired by a 6-axis industrial robot.

Useful tools

In this section we will be reviewing the tools that you will need when embarking on a NeRF project. This includes tools for rendering scenes from 3D models, a tool to extract approximate camera coordinates from different pictures of a scene, and various tools to create 3D models from pictures/video of a scene using the classic approach.

Blender

Blender is a powerful and versatile 3D creation suite that can be used to create high-quality digital models and animations. Its capabilities include advanced modeling tools for creating complex geometries and animation tools for creating lifelike movements and interactions. It also has a flexible rendering engine that can produce photorealistic images and animations. It has two main uses when working with NeRFs:

- Visualizing and rendering 3D models

- Building a training dataset: when working with datasets based on 3D models, we can capture the same scene from several known angles and use those captures to train a model.
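To make the second use more concrete, here is a small sketch of how the "known angles" could be generated before rendering. It only computes camera centers on a ring around an object placed at the origin, with Z as the up axis (as in Blender's default world). The function name and its parameters are assumptions for this example; inside Blender you would then assign each position to the camera object and point the camera at the object, for instance with a Track To constraint, before rendering each view.

```python
import math


def camera_positions(num_views, radius, elevation_deg=30.0):
    """Return evenly spaced camera centers on a ring around the origin.

    All cameras share the same elevation above the XY plane and the same
    distance (radius) from the origin; Z is treated as the up axis.
    """
    elevation = math.radians(elevation_deg)
    positions = []
    for i in range(num_views):
        azimuth = 2.0 * math.pi * i / num_views
        x = radius * math.cos(elevation) * math.cos(azimuth)
        y = radius * math.cos(elevation) * math.sin(azimuth)
        z = radius * math.sin(elevation)
        positions.append((x, y, z))
    return positions


if __name__ == "__main__":
    for position in camera_positions(num_views=8, radius=4.0):
        print(tuple(round(value, 3) for value in position))
```

Because every position is known exactly, the camera pose for each rendered image can be stored alongside it, which is precisely the information a NeRF dataset needs.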

COLMAP

COLMAP is a free and open-source, general-purpose Structure-from-Motion (SfM) and Multi-View Stereo (MVS) pipeline used for photogrammetry. It can create 3D models from collections of 2D images and can handle a variety of input types, including single images, image sequences, and video footage.

It is designed to perform three main tasks:

- Feature detection and matching: Identifying corresponding points across images, which are then used to reconstruct the 3D geometry of the scene.

- Geometric reconstruction: Estimating the camera parameters and the 3D positions of the points in the scene.

- Texture mapping: Applying color information from the input images to the 3D model.

When we mention COLMAP in this series, we mainly mean geometric reconstruction: we need to estimate the camera parameters, and COLMAP can do this directly. You generally don’t need to run it manually, though, as it is integrated into other tools.
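If you do want to run the camera estimation yourself, the sketch below wraps the three command-line steps of COLMAP's sparse reconstruction (feature extraction, matching, and mapping) in Python. It assumes the `colmap` executable is installed and on your PATH, and that the images live in an `images` folder inside a workspace directory. The workspace layout and the function names are assumptions for this example, and COLMAP offers many more options than shown here.

```python
import subprocess
from pathlib import Path


def colmap_commands(workspace):
    """Build the COLMAP commands for a basic sparse reconstruction."""
    ws = Path(workspace)
    database = str(ws / "database.db")
    images = str(ws / "images")
    sparse = str(ws / "sparse")
    return [
        # 1. Detect features in every image.
        ["colmap", "feature_extractor",
         "--database_path", database, "--image_path", images],
        # 2. Match features between all image pairs.
        ["colmap", "exhaustive_matcher", "--database_path", database],
        # 3. Estimate camera parameters and sparse 3D points.
        ["colmap", "mapper",
         "--database_path", database, "--image_path", images,
         "--output_path", sparse],
    ]


def run_sparse_reconstruction(workspace):
    """Run the commands in order, stopping at the first failure."""
    # The mapper expects its output directory to exist already.
    Path(workspace, "sparse").mkdir(parents=True, exist_ok=True)
    for command in colmap_commands(workspace):
        subprocess.run(command, check=True)


if __name__ == "__main__":
    # Print the commands instead of running them, so this is safe to try.
    for command in colmap_commands("my_scene"):
        print(" ".join(command))
```

Exhaustive matching compares every pair of images, which is fine for small captures but slows down quickly as the number of images grows.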

NerfStudio

NerfStudio is a versatile open-source tool designed for working with NeRF (Neural Radiance Fields) projects. It provides users with a fast and efficient solution for rendering scenes using NeRFs, making it an ideal choice for those with access to robust hardware. Adaptable to various use cases, NerfStudio is a popular choice for users seeking a customizable and accessible platform for their NeRF-related projects.

- Pros:

- Open source

- Fast (Depending on the hardware, it can render a scene in a few minutes at most)

- Free

- Provides a variety of models to render scenes

- Cons:

- Hard to set up

- Requires access to good hardware, particularly GPUs

LumaLabs

LumaLabs is a promising tool for those who want to start experimenting with NeRFs, as it allows users to easily create 3D models from videos or images without specialized knowledge or experience in the field. There is also an API available, which is a convenient way to integrate 3D modeling into your own workflows. However, as proprietary software, its functionality and pricing may change at the discretion of its developers. Keep in mind that it is not open source, which makes it harder to adapt to a particular use case. For now, new users get access to a few free renders.

- Pros:

- Easy to use

- A few free renders for new users

- Cons:

- Slow (up to one hour for a scene)

- Proprietary software

Using this tool, we rendered our own scene. It took around one hour to render using the free version. We only needed a video of the scene taken from different angles; the app took care of everything else, including removing the background (optional). Here is the final result:

The future of NeRF

As we conclude this series, we would like to mention some new approaches that could catch your interest. Several models have incorporated text alongside NeRF models. Two notable examples in this line are LERF, which combines CLIP and NeRF to let you query a scene for specific objects using text, and Instruct-NeRF2NeRF, which employs InstructPix2Pix to edit NeRF scenes with text prompts.

With the recent surge in generative models, particularly diffusion models, it comes as no surprise that some approaches have integrated diffusion into their processes. DreamFusion, an intriguing method, uses a pretrained 2D text-to-image diffusion model to perform text-to-3D synthesis. Another approach, Zero-1-to-3, synthesizes novel camera views of an object from just a single image. You can also use this method to train a NeRF for 3D reconstruction.

Final thoughts

Throughout this series, we have examined state-of-the-art NeRF approaches and the top tools for rendering scenes using traditional methods. The optimal approach will largely depend on your specific use case. If you have access to robust hardware and are looking for a fast, open-source tool that can be customized to your needs, NerfStudio is likely your best option. On the other hand, if you prefer a user-friendly solution and are willing to pay a fee per render, LumaLabs may be the ideal choice.