What is Artificial Intelligence and why now?

By Rodrigo Beceiro

To understand what Artificial Intelligence (AI) is, we first need to agree on what it means to be intelligent. Intelligence can be defined as the ability of the mind to learn, understand, reason, make decisions and form an idea of a given reality.

The next question we could ask ourselves is: what is the most intelligent creature out there? The answer is simple: humans. We are able to perform many different complex tasks that we would like computers to perform as well.

Oral communication is one of those complex tasks: we listen and talk, communicating through spoken language. In AI, the subfield that replicates how humans communicate orally is called Speech Recognition. This is what Siri, Alexa or Google Home use to understand our requests.

You are also reading this article, so you are communicating with me (the writer) by means of written language. I write and you read, and you could even write back and I would be able to decode your message. Computers do this through the field of Natural Language Processing, or NLP. A closely related term is Natural Language Understanding, or NLU, which is usually treated as the part of NLP concerned with extracting meaning from text.

Similarly, by looking at the pictures shown here, you can tell there is a sky in the first one, and it doesn’t take much to realize that the second one shows palm trees and is probably a beach. Computers can come to the same conclusions thanks to Computer Vision, which is also a subfield of AI.

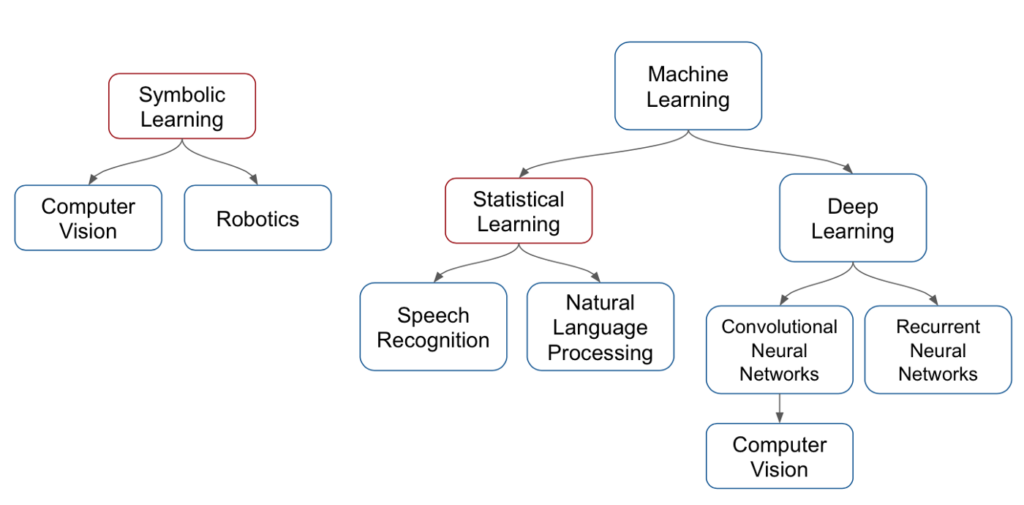

Computer Vision is part of what is called Symbolic Learning, while Speech Recognition and NLP are traditionally part of Statistical Learning.

Humans also move around and interact with their environment. Computers do this thanks to robotics, another subfield of AI. Strictly speaking, robotics also draws on many other fields, such as physics and electronics, which are not AI. Self-driving cars and other autonomous vehicles are a part of robotics, and robotics is also part of the Symbolic Learning we mentioned earlier.

Humans are also capable of recognizing patterns. If a given company sold $1,000 three years ago, $2,000 two years ago and $3,000 last year, you’ll probably be able to deduce, with a certain degree of confidence, that it will sell $4,000 this year. Computers can see this pattern too, and they can also make predictions based on what they’ve learnt. What is more, while humans can easily work with two or three dimensions at the same time, computers can do so with hundreds or thousands, so they actually outperform us in pattern recognition. The field of AI which studies pattern recognition is called Machine Learning and it is used to solve two different kinds of problems:

- Classification

- Prediction

The difference between them is subtle. If, for example, you are trying to determine whether a customer is a VIP or not, then you are classifying. If you are trying to estimate how likely a given customer is to buy from a competitor, then you are predicting.
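The sales example mentioned earlier can be sketched in a few lines of code. This is only a minimal illustration, assuming the pattern is a straight line and using an ordinary least-squares fit; the function name `fit_line` and the year numbering are made up for this example, and real Machine Learning systems use far more data and more flexible models.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalized).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Years are numbered 1, 2, 3 (assumption: year 4 is "this year").
years = [1, 2, 3]
sales = [1000.0, 2000.0, 3000.0]

slope, intercept = fit_line(years, sales)
forecast = slope * 4 + intercept
print(forecast)  # 4000.0
```

The computer "learns" the slope and intercept from the past three years and then extrapolates one year ahead, which is the same reasoning a person would apply by eye.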

So now we know that if we teach a computer to replicate the different tasks that make humans smart, we will have created an Artificial Intelligence system. But how are we going to teach this to computers? Well, a good starting point is to understand how humans learn. We learn by using our brain, which can be seen as a network of neurons. If we could replicate the structure and/or functionality of the brain, we would have taught the computer to be smart. This approach to teaching computers is called Deep Learning, and it is a subfield of Machine Learning.

Deep Learning is a big field inside AI and it can be divided into specific tasks. For example, if you see these parts of an image:

You can deduce that there’s a sun, but it will be harder for you to tell that there’s also a sandhill there. That’s because some of the spatial information was lost when the image was split into pieces. Neural networks whose neurons use spatial information to identify patterns or solve classification problems are called convolutional neural networks, and they are a subfield of Deep Learning. They are a different approach to solving Computer Vision tasks.
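To make the idea of "using spatial information" a bit more concrete, here is a minimal sketch of the operation a convolutional layer is built on: sliding a small grid of weights (a kernel) over an image and summing the products. The tiny image, the kernel values and the function name `convolve2d` are assumptions chosen for illustration. In a real network the kernel values are learned rather than written by hand, and, as in most deep learning libraries, the kernel is applied without flipping (technically a cross-correlation).

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# A 3x4 "image": dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# A hand-written kernel that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]

response = convolve2d(image, kernel)
print(response)  # [[0, 2, 0], [0, 2, 0]]
```

The output is only non-zero where neighbouring pixels change from dark to bright, so the result depends on where pixels sit relative to each other. If the image were cut into pieces and shuffled, that neighbourhood information would be lost.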

We humans also have the ability to remember things, for example, when our next visit to the doctor is. There are also specialized neural networks for this. They are called recurrent neural networks.

So, summing up, AI can be divided as follows:

It is important to point out that we can build many specific AI applications but not a general AI application (not yet at least). This means that we can build an application that predicts a company’s stock price (a specific AI application), another one that decodes voice and so on, but Terminator (general AI) is still only science fiction.

We mentioned Statistical Learning and Symbolic Learning earlier. Symbolic learning means you teach the computer symbols that humans can understand, while statistical learning means you just feed it a lot of information and the computer decides by itself what is important for making a decision and what is not. For example, if you tell a self-driving car to stop when it sees a red sign at the side of the road with the word “Stop” written on it in white, that’s symbolic learning. On the other hand, if you show the self-driving car many hours of video of cars stopping when a Stop sign appears, that’s statistical learning.
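The contrast can be sketched with a toy example. Everything here is an assumption made for illustration: the function names, the single "redness" feature and the four labeled examples are invented, and a real self-driving system would learn from images or video, not from one number. The point is only to show a rule written by a human next to a rule derived from data.

```python
def symbolic_is_stop_sign(color, text):
    """Symbolic approach: a human writes the rule explicitly."""
    return color == "red" and text.upper() == "STOP"


def learn_threshold(examples):
    """Statistical approach: derive a decision threshold from labeled data.

    `examples` is a list of (redness, is_stop_sign) pairs. The threshold is
    the midpoint between the average redness of each class.
    """
    positives = [value for value, label in examples if label]
    negatives = [value for value, label in examples if not label]
    mean_pos = sum(positives) / len(positives)
    mean_neg = sum(negatives) / len(negatives)
    return (mean_pos + mean_neg) / 2


# Hypothetical training data: how red a sign is, and whether cars stopped.
examples = [(0.9, True), (0.8, True), (0.2, False), (0.1, False)]
threshold = learn_threshold(examples)


def statistical_is_stop_sign(redness):
    """Apply the threshold the computer worked out from the examples."""
    return redness > threshold


print(symbolic_is_stop_sign("red", "Stop"))
print(statistical_is_stop_sign(0.7))
```

In the first function the knowledge lives in the code a person wrote. In the second, nobody told the computer where to draw the line; it came from the examples, and it would change if the examples changed.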

Why now?

OK, AI is great, we can agree on that. But why all the fuss in recent years, especially given that AI has existed for a long while? It has been around since the 1950s. Turing proposed his famous Turing test in 1950, and the term “artificial intelligence” was first used at a workshop at Dartmouth College in 1956. Some of the attendees there were actually given millions of dollars to create a machine as intelligent as a human but couldn’t achieve it at that time. That and other initiatives eventually ran out of money and the first “AI winter” came. That was around the 1970s and it lasted until the 1980s, when the Japanese government poured money into research. A new “AI summer” had arrived and it lasted for several years until initiatives once more ran out of money: another winter came. That winter lasted until the 2000s, when yet another summer came. This time big companies such as Google, Facebook, Baidu and others are the ones putting money into research (sounds like déjà vu, right?). But how do we know whether this is just another summer or whether this time AI is here for good?

In AI you use a lot of math theory to come up with algorithms that are fed with data and run on given hardware to output a decision. The big difference this time is the hardware. Hardware advances now allow us to run much more complex algorithms in reasonable times, as well as to store amounts of data that were unthinkable before. All of this combined allows us to build applications that achieve good results in reasonable amounts of time.

Imagine I ask Alexa or Siri to set a timer while I’m cooking: I will want an immediate response. If it takes Alexa 10 minutes to decode “set a timer for 5 minutes”, then it’s no good. Instead, my voice is decoded immediately and a response is given in a matter of milliseconds.

At Marvik.ai we think that there’s no winter coming anytime soon, because hardware has gotten to a point where we are able to build lots of commercial applications. AI is just getting started.