By Octavio Deshays

What is Text-to-Speech and why does it matter in 2025?

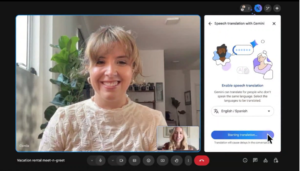

In recent weeks, you may have heard about Google Meet’s impressive new speech translation feature: as you speak, the other person hears audio in their own language. This innovation and others, such as the voice-enabled version of ChatGPT, are powered by text-to-speech for voice chat applications, a rapidly evolving field at the heart of modern communication tools.

Text-to-Speech (TTS) technology has evolved significantly, transforming written text into spoken language with remarkable realism. It plays a crucial role in voice chat applications by enabling real-time communication and enhancing user experience in multiple ways. Modern TTS systems support personalized voices and emotional tones, making interactions more engaging and human-like. Additionally, TTS improves accessibility by making digital content available to individuals with visual impairments or reading difficulties. As reliance on voice-based interfaces continues to grow, TTS is becoming an essential component of innovative and user-friendly applications.

In this blog, we’ll take a deep dive into the current TTS landscape, tracing its evolution and examining today’s most advanced models across both open-source and commercial offerings. We’ll compare their capabilities, trade-offs and deployment options, with a particular focus on real-time use cases like voice chat. We will also explore one of the most important yet often overlooked aspects: deploying TTS on edge devices, where limited resources can make certain models unviable. By the end, you’ll have a clear understanding of the state of the art in TTS, the strengths and limitations of leading models and practical insights into choosing the right solution for your own voice-based applications, whether you’re building in the cloud, on an edge device or somewhere in between.

The evolution of Text-to-Speech systems

Text-to-Speech technology has undergone a remarkable transformation, evolving from basic methods that produced robotic-sounding speech to advanced neural systems capable of generating highly natural and expressive voices. This evolution can be divided into different phases, each characterized by distinct methodologies and technological advancements.

The earliest TTS systems relied on concatenative synthesis, where speech was produced by selecting and concatenating pre-recorded segments (such as phonemes or syllables) stored in a large database. Later, parametric synthesis emerged as a more flexible approach, generating speech based on phonetic parameters like pitch, duration and intonation. These models converted text into phonetic representations and synthesized speech dynamically, albeit with limited naturalness and expressiveness.

The advent of neural networks revolutionized TTS by enabling models to learn directly from large datasets, capturing the subtle nuances of human speech including intonation, rhythm and emotion. One of the first groundbreaking neural TTS models was WaveNet, introduced by DeepMind in 2016 (which seems like ages ago in AI time). WaveNet generated raw audio sample by sample, producing speech that mimics the human voice far more naturally than its predecessors.

This breakthrough, together with other deep learning models like Tacotron 2 from Google (with a widely used open-source implementation from NVIDIA) and the subsequent adoption of the Transformer architecture, first brought to speech synthesis by the Transformer TTS model, laid the foundation for today’s state-of-the-art TTS models. These models continue to build on those advances to deliver ever more realistic and expressive synthetic speech.

In the following audio samples, you can hear how TTS generation has progressed:

Concatenative synthesis

Deep learning model: Tacotron 2

State-of-the-art model: OpenAI API

State-of-the-art TTS models

The field of Text-to-Speech has reached unprecedented sophistication, with today’s leading models delivering realism and flexibility once thought impossible. Whether you need a model for real-time translation, voice assistants or creative applications, there’s no shortage of options. But with so many choices, it’s easy to feel overwhelmed. To find the best fit for your project, focus on a few key factors when comparing models.

Key aspects of a TTS model

Open vs closed source

Open-source models offer transparency, customization and self-hosting, which matters if you work with sensitive personal information because the data never has to leave your own infrastructure. Closed-source APIs offer ease of use and scalability but less flexibility and control over the underlying model: an unexpected change to the model by the provider may impact your application.

How much control do you need?

Some models allow fine-grained control over emotion, intonation and speaking style through parameters or tags, like Chatterbox’s emotion exaggeration parameter or OpenAI’s natural-language style prompts, while others offer more fixed voice outputs.

Real-time performance (latency)

For applications like voice chat or live translation, latency matters. Models like Orpheus and Chatterbox provide latency of around 200ms, which makes them suitable for real-time use cases. OpenAI (~500ms) and ElevenLabs (~400ms) also deliver outputs fast enough for real-time interaction.
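For interactive use, the number that matters most is usually time to first audio: how long the user waits before playback can start. Below is a minimal sketch for measuring it, assuming your engine exposes its output as an iterable of audio byte chunks. The helper names are hypothetical, and `fake_stream` is only a stand-in for a real streaming TTS engine.

```python
import time
from typing import Iterable, Iterator, Tuple


def time_to_first_audio(chunks: Iterable[bytes]) -> Tuple[float, int]:
    """Return (seconds until the first non-empty chunk, total bytes received).

    `chunks` is any iterable that yields audio bytes as they are produced,
    for example a streaming TTS response. The whole stream is consumed.
    """
    start = time.perf_counter()
    first_chunk_s = None
    total_bytes = 0
    for chunk in chunks:
        if chunk and first_chunk_s is None:
            first_chunk_s = time.perf_counter() - start
        total_bytes += len(chunk)
    if first_chunk_s is None:
        raise ValueError("the stream produced no audio")
    return first_chunk_s, total_bytes


def fake_stream(delay_s: float, n_chunks: int) -> Iterator[bytes]:
    """Hypothetical stand-in for a streaming TTS engine (not a real model)."""
    time.sleep(delay_s)  # simulated work before the first chunk is ready
    for _ in range(n_chunks):
        yield b"\x00" * 320  # 10 ms of 16 kHz, 16-bit mono silence


latency_s, n_bytes = time_to_first_audio(fake_stream(0.2, 50))
print(f"first audio after {latency_s * 1000:.0f} ms, {n_bytes} bytes in total")
```

Because the timer starts before the first chunk is requested, any model warm-up inside the engine is included, which is what a user would actually experience.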

Integration complexity and deployment flexibility

Open-source models like Orpheus, Dia and Chatterbox offer flexible deployment, either locally or in the cloud. However, they require significant GPU resources (e.g., Chatterbox ~6.5 GB VRAM, Orpheus ~12 GB, Dia ~10 GB) and more setup effort. Closed-source APIs like ElevenLabs and OpenAI remove the need for dedicated hardware and offer plug-and-play integration, but with less control over deployment.

Voice cloning capabilities

Voice cloning is a key feature to consider. It lets you personalize and control the voices used in your application. If customization is a priority, evaluate whether you need zero-shot voice cloning (imitating a new voice from a short reference clip, without retraining the model) or custom voice creation. Open models like Orpheus and Chatterbox support voice cloning from minimal audio, while OpenAI currently does not offer cloning and ElevenLabs provides advanced cloning features.

Multilingual support

If you need support for multiple languages, ElevenLabs and OpenAI provide robust multilingual capabilities out of the box, while open-source models are often English-first but can be fine-tuned for other languages.

Performance in naturalness, expressiveness and accuracy

High-quality TTS models are evaluated on both naturalness and accuracy. Word Error Rate (WER) measures intelligibility: the synthesized audio is transcribed by a speech recognition model and the transcript is compared against the input text. Blind listening tests, in turn, rate expressiveness and realism. Chatterbox has outperformed ElevenLabs in naturalness and CSM stands out for capturing emotional tone. When choosing a model, balance the listening experience with clear and accurate output.
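WER itself is a word-level edit distance: substitutions, deletions and insertions needed to turn the reference into the hypothesis, divided by the number of reference words. A minimal sketch, assuming you already have an ASR transcript of the synthesized audio (the speech recognition step is not shown). Libraries such as jiwer offer the same metric with richer text normalization.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in the reference.

    Computed as a word-level Levenshtein distance. Text is only lower-cased and
    split on whitespace; real evaluations normalize punctuation and numbers too.
    """
    ref: List[str] = reference.lower().split()
    hyp: List[str] = hypothesis.lower().split()
    if not ref:
        raise ValueError("the reference must contain at least one word")
    # prev[j] is the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of an extra word
                prev[j - 1] + cost,  # substitution, or a match when cost is 0
            )
        prev = curr
    return prev[-1] / len(ref)


# Input text vs. what a speech recognizer heard in the synthesized audio.
print(word_error_rate("the quick brown fox", "the quick brown box"))  # 0.25
```

Note that WER rewards intelligibility, not naturalness: a flat, robotic voice can score well, which is why it is paired with listening tests.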

You should also consider the deployment environment and test the model with your own dataset. A model may perform well on general data but struggle with industry-specific terms.
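One practical way to run that test is to synthesize sentences containing your domain vocabulary, transcribe the audio with a speech recognition model and count how often each term fails to come back. The sketch below assumes the (input text, transcript) pairs already exist; `missed_terms` is a hypothetical helper, and the example sentences are made up for illustration.

```python
import re
from typing import Dict, Iterable, List, Tuple


def _tokens(text: str) -> List[str]:
    # Lower-case and drop punctuation so "Metformin," matches "metformin".
    return re.findall(r"[\w'-]+", text.lower())


def _contains(tokens: List[str], seq: List[str]) -> bool:
    # True if seq appears as a contiguous run inside tokens.
    n = len(seq)
    return any(tokens[i:i + n] == seq for i in range(len(tokens) - n + 1))


def missed_terms(pairs: Iterable[Tuple[str, str]],
                 terms: Iterable[str]) -> Dict[str, int]:
    """For each domain term, count the sentences where it appears in the input
    text but is missing from the ASR transcript of the synthesized audio.

    `pairs` holds (input_text, asr_transcript) tuples.
    """
    term_tokens = {term: _tokens(term) for term in terms}
    misses = {term: 0 for term in term_tokens}
    for source, transcript in pairs:
        src, hyp = _tokens(source), _tokens(transcript)
        for term, seq in term_tokens.items():
            if _contains(src, seq) and not _contains(hyp, seq):
                misses[term] += 1
    return misses


pairs = [
    ("Take 500 mg of metformin daily.", "take 500 mg of met forming daily"),
    ("Deploy the pod on Kubernetes.", "deploy the pod on kubernetes"),
]
print(missed_terms(pairs, ["metformin", "Kubernetes"]))
# {'metformin': 1, 'Kubernetes': 0}
```

A miss can come from the TTS mispronouncing the term or from the recognizer mishearing it, so listen to the flagged samples before blaming either model.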

Selecting the best option

With these key aspects in mind, let’s take a closer look at how the leading TTS models stack up, both open-source and closed-source, to help you find the best fit for your application.

When comparing the top open-source TTS models, Chatterbox stands out for its balance of ease of use, performance and customization. Canopy Orpheus offers impressive human-like intonation and low-latency streaming, but it requires heavier infrastructure, needing at least 12GB of GPU VRAM. Sesame CSM focuses strongly on conversational dynamics, which makes it more of a niche choice for dialogue-driven use cases. Dia-1.6B excels at multi-speaker dialogue and nonverbal expression but also demands substantial resources.

On the closed-source side, ElevenLabs continues to lead with its highly realistic voice generation, voice cloning and advanced AI dubbing features. It supports 29 languages and provides fast API response times (~400ms), making it a go-to option for companies needing scalable, multilingual voice solutions. OpenAI’s Audio API is also a strong player, offering expressive, low-latency outputs and easy style control via natural language prompts. However, it lacks voice cloning, which limits customization for applications where brand or user personalization is key.

Considering all these aspects (control over speech style and emotion, real-time performance, deployment flexibility, multilingual support and voice cloning capabilities), Chatterbox emerges as the best open-source alternative. It delivers high-quality, expressive speech with emotion control, zero-shot voice cloning and perceptual watermarking, all while keeping VRAM requirements relatively low (~6.5 GB). In blind tests, listeners even preferred its outputs over ElevenLabs’. You can try it yourself in its easy-to-use Hugging Face demo. For those seeking a closed-source, ready-to-use solution with strong multilingual and cloning capabilities, ElevenLabs remains the top choice.
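The trade-offs above can be turned into a simple shortlist filter. This is a hypothetical helper, not part of any library, and the figures are the approximate ones quoted in this article (Dia and CSM are left out because not all of their figures are given here), so re-measure them on your own setup before relying on the result.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class TTSOption:
    name: str
    open_source: bool            # can be self-hosted
    voice_cloning: bool
    latency_ms: int              # approximate figure quoted in this article
    vram_gb: Optional[float]     # None for hosted APIs (no local GPU needed)


# Approximate figures from this article; re-measure them on your own setup.
CANDIDATES = [
    TTSOption("Chatterbox", True, True, 200, 6.5),
    TTSOption("Canopy Orpheus", True, True, 200, 12.0),
    TTSOption("ElevenLabs", False, True, 400, None),
    TTSOption("OpenAI Audio API", False, False, 500, None),
]


def shortlist(options: List[TTSOption], *, need_cloning: bool = False,
              self_hosted: bool = False, max_latency_ms: Optional[int] = None,
              max_vram_gb: Optional[float] = None) -> List[str]:
    """Return the names of options that satisfy every stated requirement."""
    picks = []
    for o in options:
        if need_cloning and not o.voice_cloning:
            continue
        if self_hosted and not o.open_source:
            continue
        if max_latency_ms is not None and o.latency_ms > max_latency_ms:
            continue
        # Hosted APIs have no local VRAM cost, so the limit only applies to
        # models you run yourself.
        if max_vram_gb is not None and o.vram_gb is not None and o.vram_gb > max_vram_gb:
            continue
        picks.append(o.name)
    return picks


print(shortlist(CANDIDATES, need_cloning=True, self_hosted=True, max_vram_gb=8))
# ['Chatterbox']
```

Hard filters like these only narrow the field; naturalness and accuracy on your own data still have to be judged by listening and by metrics such as WER.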

Always test models with your own data and vocabulary to ensure the best results. Below, you can hear Chatterbox’s remarkable zero-shot voice cloning using just a short reference audio:

Audio of reference voice

Chatterbox TTS output

Edge deployment of TTS models for voice chat applications

In the previous section, we explored the current state-of-the-art TTS models, both open and closed source. However, deploying these models on edge devices, such as phones, embedded systems or offline assistants, requires careful reconsideration of your options. Edge environments present unique constraints that differ significantly from cloud-based deployments.

First and foremost, edge devices operate under strict resource limitations. Unlike the cloud, edge environments have fixed limits on memory, compute power and energy. Upgrades and scaling are not as easy to manage. This makes model efficiency a critical factor.

Moreover, TTS is only one component of a complete voice chat system. As shown in this figure, a typical pipeline includes:

- Speech-to-Text to transcribe the user’s voice,

- A Large Language Model (LLM) to interpret and generate a response,

- Text-to-Speech to synthesize the reply into audio.
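The three stages above can be sketched as a chain of plain functions. This is a minimal illustration, assuming each engine can be wrapped as a callable; the lambdas in the usage part are hypothetical stand-ins, not real models. Real-time systems usually also stream between stages (for example, starting TTS on the first sentence of the reply) to cut latency, which this sequential version does not do.

```python
from dataclasses import dataclass
from typing import Callable

# Each stage is a plain function so any STT, LLM or TTS engine can be plugged in.
SpeechToText = Callable[[bytes], str]
Responder = Callable[[str], str]
TextToSpeech = Callable[[str], bytes]


@dataclass
class VoiceChatPipeline:
    stt: SpeechToText
    llm: Responder
    tts: TextToSpeech

    def respond(self, user_audio: bytes) -> bytes:
        text_in = self.stt(user_audio)   # 1. transcribe the user's voice
        reply = self.llm(text_in)        # 2. generate a text response
        return self.tts(reply)           # 3. synthesize the reply as audio


# Hypothetical stand-ins: "audio" here is just UTF-8 text, to show the data flow.
pipeline = VoiceChatPipeline(
    stt=lambda audio: audio.decode("utf-8"),
    llm=lambda text: f"You said: {text}",
    tts=lambda text: text.upper().encode("utf-8"),
)
print(pipeline.respond(b"hello"))  # b'YOU SAID: HELLO'
```

On an edge device all three callables share the same memory and compute, which is why the budget for the TTS stage is so tight.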

Even with recent progress in lightweight LLMs through techniques like model distillation and quantization, the language model remains the most resource-intensive component. As a result, only a small portion of compute resources can go to TTS. This makes it hard to run high-quality models on edge devices.
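A quick back-of-the-envelope calculation shows how little room is left. Weight memory alone is roughly parameters × bits per parameter / 8 bytes. The device budget and the LLM and STT sizes below are hypothetical, chosen only for illustration; measured VRAM figures like the ones quoted in this article are higher, since they include activations and runtime overhead.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Memory needed only to store the weights, in decimal gigabytes (1e9 B).

    Activations, caches and runtime overhead come on top, so measured usage
    is higher than this lower bound.
    """
    return n_params * bits_per_param / 8 / 1e9


# Hypothetical edge device and model sizes, chosen only for illustration.
DEVICE_BUDGET_GB = 4.0
llm_gb = weight_memory_gb(3e9, 4)      # 3B-parameter LLM, 4-bit quantized
stt_gb = weight_memory_gb(250e6, 16)   # 250M-parameter STT model in fp16
left_for_tts_gb = DEVICE_BUDGET_GB - llm_gb - stt_gb

big_tts_gb = weight_memory_gb(1e9, 16)     # hypothetical 1B-parameter TTS, fp16
small_tts_gb = weight_memory_gb(82e6, 16)  # 82M parameters in fp16

print(f"LLM {llm_gb:.2f} GB + STT {stt_gb:.2f} GB -> {left_for_tts_gb:.2f} GB left")
print(f"1B TTS weights: {big_tts_gb:.2f} GB, 82M TTS weights: {small_tts_gb:.3f} GB")
```

Under these assumptions, a 1B-parameter TTS model would use the entire remaining 2 GB for its weights alone, while an 82M-parameter model needs about 0.16 GB and leaves headroom for activations.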

Let’s evaluate the options discussed earlier in light of these constraints:

- Closed-source APIs (e.g., ElevenLabs) need constant internet access and external servers, conditions that are often unavailable or undesirable for edge deployment.

- Open-source state-of-the-art models such as Canopy Orpheus and Sesame CSM offer exceptional voice quality but are too resource-intensive. Orpheus, for instance, needs at least 12GB of GPU VRAM. CSM and Chatterbox require around 6GB, which exceeds what’s typically available on edge hardware.

This leads us to consider alternatives optimized for edge deployment, even if they sacrifice some voice fidelity. One such model is Kokoro TTS, a lightweight open-source TTS system with only 82 million parameters. It requires just 1.5GB of GPU VRAM and is fast enough for real-time inference. Although it doesn’t match the expressiveness of Orpheus or CSM, it delivers natural-sounding speech suitable for conversational applications. Additionally, it comes with an open library and over 20 pre-configured voice styles, making it developer-friendly and easy to integrate.
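A common way to check whether a model is fast enough for real-time inference on your own hardware is the real-time factor (RTF): synthesis time divided by the duration of the audio produced. A minimal sketch follows; in practice you would time the call to your TTS engine with `time.perf_counter()`, and the engine call is omitted here. The measurement and the 24 kHz output rate are hypothetical.

```python
def real_time_factor(synthesis_s: float, n_samples: int, sample_rate_hz: int) -> float:
    """RTF = time spent synthesizing / duration of the audio produced.

    RTF below 1 means audio is generated faster than it plays back, which is a
    prerequisite for real-time use (time to first audio matters as well).
    """
    if n_samples <= 0 or sample_rate_hz <= 0:
        raise ValueError("need a positive sample count and sample rate")
    audio_s = n_samples / sample_rate_hz
    return synthesis_s / audio_s


# Hypothetical measurement: 0.75 s of compute for 72,000 samples at an
# assumed 24 kHz output rate, i.e. 3 s of speech.
rtf = real_time_factor(0.75, 72_000, 24_000)
print(f"RTF = {rtf:.2f} -> {'real-time capable' if rtf < 1 else 'too slow'}")
# RTF = 0.25 -> real-time capable
```

Measure RTF on the target device with the LLM and STT models loaded at the same time, since shared compute can push a model that is comfortably real-time in isolation above 1.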

In summary, leading TTS models excel in realism and emotional nuance, but their high resource demands make them impractical for edge deployment. Kokoro TTS offers a compelling alternative, striking a balance between performance, resource efficiency and usability. For voice chat applications running on edge devices, it stands out as a practical and reliable choice.

Conclusion

By now, you should have a clear picture of the current Text-to-Speech landscape. Models like Canopy Orpheus, Sesame CSM and Chatterbox are setting new standards for naturalness, emotion and expressiveness in this fast-moving field.

Choosing the right solution depends entirely on your needs and constraints. Here’s a quick guide to help you decide:

- For edge devices without stable internet access, lightweight models like Kokoro TTS are a solid choice. Alternatively, you can use quantized versions of larger models for local inference.

- If your top priority is voice quality and you have access to a GPU or cloud infrastructure, choose high-fidelity open-source models like Chatterbox, CSM or Orpheus, with Chatterbox being the best option if you need voice cloning.

- If you need broad multilingual support and want to minimize engineering overhead, closed APIs like OpenAI Audio API or ElevenLabs offer ready-to-use solutions with impressive language coverage and emotional control.

Finally, keep in mind that the TTS field is evolving at a rapid pace. Solutions that are state-of-the-art today may be replaced or surpassed in a matter of weeks. Advancements in distillation, quantization and training efficiency are making TTS deployment easier across platforms. These improvements bring high-quality voice synthesis to everything from mobile apps to embedded systems.